Large language fashions (LLMs) are extremely highly effective common reasoning instruments which might be helpful in a variety of conditions.

But working with LLMs presents challenges which might be completely different from constructing conventional software program:

- Calls are usually gradual, and streams generate output because it turns into obtainable.

- Instead of structured enter (one thing like JSON) with fastened parameters, they take unstructured and arbitrary pure language as enter. They are able to “understanding” subtleties of that language.

- They are non-deterministic. You might get completely different outputs even with the identical enter.

LangChain is a well-liked framework for creating LLM-powered apps. It was constructed with these and different elements in thoughts, and supplies a variety of integrations with closed-source mannequin suppliers (like OpenAI, Anthropic, and Google), open supply fashions, and different third-party parts like vectorstores.

This article will stroll by way of the basics of constructing with LLMs and LangChain’s Python library. The solely requirement is fundamental familiarity with Python, – no machine studying expertise wanted!

You’ll find out about:

Let’s dive in!

Project Setup

We advocate utilizing a Jupyter pocket book to run the code on this tutorial because it supplies a clear, interactive setting. See this web page for directions on setting it up domestically, or try Google Colab for an in-browser expertise.

The very first thing you may have to do is select which Chat Model you wish to use. If you have ever used an interface like ChatGPT earlier than, the fundamental concept of a Chat Model will probably be acquainted to you – the mannequin takes messages as enter, and returns messages as output. The distinction is that we’ll be doing it in code.

This information defaults to Anthropic and their Claude 3 Chat Models, however LangChain additionally has a wide selection of different integrations to select from, together with OpenAI fashions like GPT-4.

pip set up langchain_core langchain_anthropic

If you’re working in a Jupyter pocket book, you’ll have to prefix pip with a % image like this: %pip set up langchain_core langchain_anthropic.

You’ll additionally want an Anthropic API key, which you’ll be able to receive right here from their console. Once you could have it, set as an setting variable named ANTHROPIC_API_KEY:

export ANTHROPIC_API_KEY="..."

You can even move a key instantly into the mannequin for those who want.

First steps

You can initialize your mannequin like this:

from langchain_anthropic import ChatAnthropic

chat_model = ChatAnthropic(

mannequin="claude-3-sonnet-20240229",

temperature=0

)

# If you like to move your key explicitly

# chat_model = ChatAnthropic(

# mannequin="claude-3-sonnet-20240229",

# temperature=0,

# api_key="YOUR_ANTHROPIC_API_KEY"

# )The mannequin parameter is a string that matches considered one of Anthropic’s supported fashions. At the time of writing, Claude 3 Sonnet strikes a great stability between pace, price, and reasoning functionality.

temperature is a measure of the quantity of randomness the mannequin makes use of to generate responses. For consistency, on this tutorial, we set it to 0 however you possibly can experiment with increased values for artistic use circumstances.

Now, let’s attempt operating it:

chat_model.invoke("Tell me a joke about bears!")

Here’s the output:

AIMessage(content material="Here's a bear joke for you:nnWhy did the bear dissolve in water?nBecause it was a polar bear!")

You can see that the output is one thing known as an AIMessage. This is as a result of Chat Models use Chat Messages as enter and output.

Note: You had been in a position to move a easy string as enter within the earlier instance as a result of LangChain accepts a couple of types of comfort shorthand that it mechanically converts to the right format. In this case, a single string is changed into an array with a single HumanMessage.

LangChain additionally incorporates abstractions for pure text-completion LLMs, that are string enter and string output. But on the time of writing, the chat-tuned variants have overtaken LLMs in reputation. For instance, GPT-4 and Claude 3 are each Chat Models.

To illustrate what’s happening, you possibly can name the above with a extra specific checklist of messages:

from langchain_core.messages import HumanMessage

chat_model.invoke([

HumanMessage("Tell me a joke about bears!")

])

And you get the same output:

AIMessage(content material="Here's a bear joke for you:nnWhy did the bear deliver a briefcase to work?nHe was a enterprise bear!")

Prompt Templates

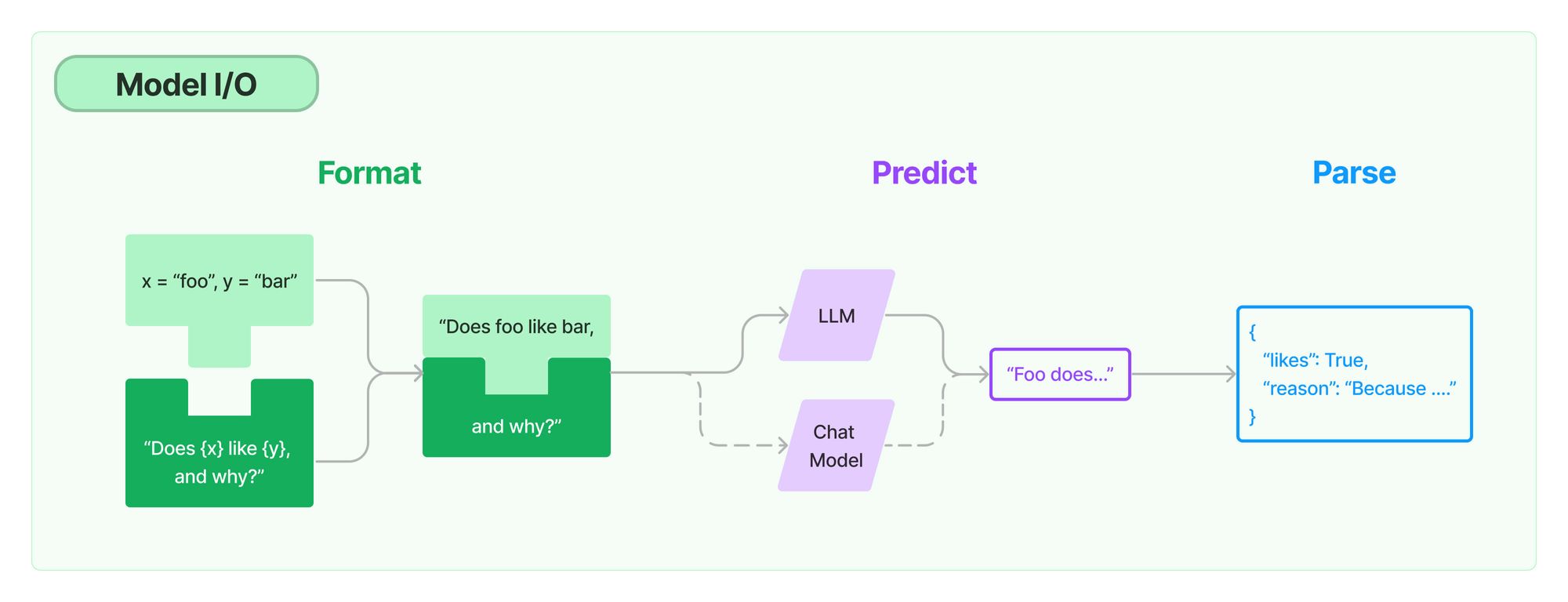

Models are helpful on their very own, nevertheless it’s typically handy to parameterize inputs so that you just don’t repeat boilerplate. LangChain supplies Prompt Templates for this function. A easy instance could be one thing like this:

from langchain_core.prompts import ChatPromptTemplate

joke_prompt = ChatPromptTemplate.from_messages([

("system", "You are a world class comedian."),

("human", "Tell me a joke about {topic}")

])

You can apply the templating utilizing the identical .invoke() technique as with Chat Models:

joke_prompt.invoke({"subject": "beets"})

Here’s the outcome:

ChatPromptWorth(messages=[

SystemMessage(content="You are a world class comedian."),

HumanMessage(content="Tell me a joke about beets")

])

Let’s go over every step:

- You assemble a immediate template consisting of templates for a

SystemMessageand aHumanMessageutilizingfrom_messages. - You can consider

SystemMessagesas meta-instructions that aren’t half of the present dialog, however purely information enter. - The immediate template incorporates

{subject}in curly braces. This denotes a required parameter named"subject". - You invoke the immediate template with a dict with a key named

"subject"and a worth"beets". - The outcome incorporates the formatted messages.

Next, you may learn to use this immediate template along with your Chat Model.

Chaining

You might have seen that each the Prompt Template and Chat Model implement the .invoke() technique. In LangChain phrases, they’re each cases of Runnables.

You can compose Runnables into “chains” utilizing the pipe (|) operator the place you .invoke() the subsequent step with the output of the earlier one. Here’s an instance:

chain = joke_prompt | chat_model

The ensuing chain is itself a Runnable and mechanically implements .invoke() (in addition to a number of different strategies, as we’ll see later). This is the muse of LangChain Expression Language (LCEL).

Let’s invoke this new chain:

chain.invoke({"subject": "beets"})

The chain returns a joke whose subject is beets:

AIMessage(content material="Here's a beet joke for you:nnWhy did the beet blush? Because it noticed the salad dressing!")

Now, let’s say you wish to work with simply the uncooked string output of the message. LangChain has a element known as an Output Parser, which, because the identify implies, is answerable for parsing the output of a mannequin right into a extra accessible format. Since composed chains are additionally Runnable, you possibly can once more use the pipe operator:

from langchain_core.output_parsers import StrOutputParser

str_chain = chain | StrOutputParser()

# Equivalent to:

# str_chain = joke_prompt | chat_model | StrOutputParser()

Cool! Now let’s invoke it:

str_chain.invoke({"subject": "beets"})

And the result’s now a string as we’d hoped:

"Here's a beet joke for you:nnWhy did the beet blush? Because it noticed the salad dressing!"

You nonetheless move {"subject": "beets"} as enter to the brand new str_chain as a result of the primary Runnable within the sequence continues to be the Prompt Template you declared earlier than.

Streaming

One of the most important benefits to composing chains with LCEL is the streaming expertise.

All Runnables implement the .stream()technique (and .astream() for those who’re working in async environments), together with chains. This technique returns a generator that may yield output as quickly because it’s obtainable, which permits us to get output as rapidly as potential.

While each Runnable implements .stream(), not all of them assist a number of chunks. For instance, for those who name .stream() on a Prompt Template, it should simply yield a single chunk with the identical output as .invoke().

You can iterate over the output utilizing for ... in syntax. Try it with the str_chain you simply declared:

for chunk in str_chain.stream({"subject": "beets"}):

print(chunk, finish="|")

And you get a number of strings as output (chunks are separated by a | character within the print perform):

Here|'s| a| b|eet| joke| for| you|:|

Why| did| the| b|eet| bl|ush|?| Because| it| noticed| the| sal|advert| d|ressing|!|

Chains composed like str_chain will begin streaming as early as potential, which on this case is the Chat Model within the chain.

Some Output Parsers (just like the StrOutputParser used right here) and plenty of LCEL Primitives are in a position to course of streamed chunks from earlier steps as they’re generated – primarily appearing as remodel streams or passthroughs – and don’t disrupt streaming.

How to Guide Generation with Context

LLMs are educated on giant portions of knowledge and have some innate “information” of varied subjects. Still, it’s widespread to move the mannequin non-public or extra particular information as context when answering to glean helpful data or insights. If you have heard the time period “RAG”, or “retrieval-augmented technology” earlier than, that is the core precept behind it.

One of the best examples of that is telling the LLM what the present date is. Because LLMs are snapshots of when they’re educated, they’ll’t natively decide the present time. Here’s an instance:

chat_model = ChatAnthropic(model_name="claude-3-sonnet-20240229")

chat_model.invoke("What is the present date?")

The response:

AIMessage(content material="Unfortunately, I do not even have an idea of the present date and time. As an AI assistant with out an built-in calendar, I haven't got a dynamic sense of the current date. I can give you as we speak's date based mostly on once I was given my coaching information, however that won't mirror the precise present date you are asking about.")

Now, let’s see what occurs while you give the mannequin the present date as context:

from datetime import date

immediate = ChatPromptTemplate.from_messages([

("system", 'You know that the current date is "{current_date}".'),

("human", "{question}")

])

chain = immediate | chat_model | StrOutputParser()

chain.invoke({

"query": "What is the present date?",

"current_date": date.as we speak()

})

And you possibly can see, the mannequin generates the present date:

"The present date is 2024-04-05."

Nice! Now, let’s take it a step additional. Language fashions are educated on huge portions of knowledge, however they do not know all the things. Here’s what occurs for those who instantly ask the Chat Model a really particular query a couple of native restaurant:

chat_model.invoke(

"What was the Old Ship Saloon's complete income in Q1 2023?"

)The mannequin would not know the reply natively, and even know which of the numerous Old Ship Saloons on this planet we could also be speaking about:

AIMessage(content material="I'm sorry, I haven't got any particular monetary information concerning the Old Ship Saloon's income in Q1 2023. As an AI assistant with out entry to the saloon's inside data, I haven't got details about their future projected revenues. I can solely present responses based mostly on factual data that has been supplied to me.")However, if we may give the mannequin extra context, we will information it to give you a great reply:

SOURCE = """

Old Ship Saloon 2023 quarterly income numbers:

Q1: $174782.38

Q2: $467372.38

Q3: $474773.38

This autumn: $389289.23

"""

rag_prompt = ChatPromptTemplate.from_messages([

("system", 'You are a helpful assistant. Use the following context when responding:nn{context}.'),

("human", "{question}")

])

rag_chain = rag_prompt | chat_model | StrOutputParser()

rag_chain.invoke({

"query": "What was the Old Ship Saloon's complete income in Q1 2023?",

"context": SOURCE

})

This time, here is the outcome:

"According to the supplied context, the Old Ship Saloon's income in Q1 2023 was $174,782.38."The outcome appears good! Note that augmenting technology with extra context is a really deep subject – in the actual world, this could possible take the type of an extended monetary doc or portion of a doc retrieved from another information supply. RAG is a strong method to reply questions over giant portions of knowledge.

You can try LangChain’s retrieval-augmented technology (RAG) docs to be taught extra.

Debugging

Because LLMs are non-deterministic, it turns into increasingly more essential to see the internals of what’s happening as your chains get extra complicated.

LangChain has a set_debug() technique that may return extra granular logs of the chain internals: Let’s see it with the above instance:

from langchain.globals import set_debug

set_debug(True)

from datetime import date

immediate = ChatPromptTemplate.from_messages([

("system", 'You know that the current date is "{current_date}".'),

("human", "{question}")

])

chain = immediate | chat_model | StrOutputParser()

chain.invoke({

"query": "What is the present date?",

"current_date": date.as we speak()

})

There’s much more data!

[chain/start] [1:chain:RunnableSequence] Entering Chain run with enter:

[inputs]

[chain/start] [1:chain:RunnableSequence > 2:prompt:ChatPromptTemplate] Entering Prompt run with enter:

[inputs]

[chain/end] [1:chain:RunnableSequence > 2:prompt:ChatPromptTemplate] [1ms] Exiting Prompt run with output:

[outputs]

[llm/start] [1:chain:RunnableSequence > 3:llm:ChatAnthropic] Entering LLM run with enter:

{

"prompts": [

"System: You know that the current date is "2024-04-05".nHuman: What is the current date?"

]

}

...

[chain/end] [1:chain:RunnableSequence] [885ms] Exiting Chain run with output:

{

"output": "The present date you supplied is 2024-04-05."

}

You can see this information for extra data on debugging.

You can even use the astream_events() technique to return this information. This is helpful if you wish to use intermediate steps in your software logic. Note that that is an async technique, and requires an additional model flag because it’s nonetheless in beta:

# Turn off debug mode for readability

set_debug(False)

stream = chain.astream_events({

"query": "What is the present date?",

"current_date": date.as we speak()

}, model="v1")

async for occasion in stream:

print(occasion)

print("-----")

{'occasion': 'on_chain_start', 'run_id': '90785a49-987e-46bf-99ea-d3748d314759', 'identify': 'RunnableSequence', 'tags': [], 'metadata': {}, 'information': {'enter': {'query': 'What is the present date?', 'current_date': datetime.date(2024, 4, 5)}}}

-----

{'occasion': 'on_prompt_start', 'identify': 'ChatPromptTemplate', 'run_id': '54b1f604-6b2a-48eb-8b4e-c57a66b4c5da', 'tags': ['seq:step:1'], 'metadata': {}, 'information': {'enter': {'query': 'What is the present date?', 'current_date': datetime.date(2024, 4, 5)}}}

-----

{'occasion': 'on_prompt_end', 'identify': 'ChatPromptTemplate', 'run_id': '54b1f604-6b2a-48eb-8b4e-c57a66b4c5da', 'tags': ['seq:step:1'], 'metadata': {}, 'information': {'enter': {'query': 'What is the present date?', 'current_date': datetime.date(2024, 4, 5)}, 'output': ChatPromptWorth(messages=[SystemMessage(content="You know that the current date is "2024-04-05"."), HumanMessage(content="What is the current date?")])}

-----

{'occasion': 'on_chat_model_start', 'identify': 'ChatAnthropic', 'run_id': 'f5caa4c6-1b51-49dd-b304-e9b8e176623a', 'tags': ['seq:step:2'], 'metadata': {}, 'information': {'enter': {'messages': [[SystemMessage(content="You know that the current date is "2024-04-05"."), HumanMessage(content="What is the current date?")]]}}}

-----

...

{'occasion': 'on_chain_end', 'identify': 'RunnableSequence', 'run_id': '90785a49-987e-46bf-99ea-d3748d314759', 'tags': [], 'metadata': {}, 'information': {'output': 'The present date is 2024-04-05.'}}

-----Finally, you need to use an exterior service like LangSmith so as to add tracing. Here’s an instance:

# Sign up at <https://smith.langchain.com/>

# Set setting variables

# import os

# os.environ["LANGCHAIN_TRACING_V2"] = "true"

# os.environ["LANGCHAIN_API_KEY"] = "YOUR_KEY"

# os.environ["LANGCHAIN_PROJECT"] = "YOUR_PROJECT"

chain.invoke({

"query": "What is the present date?",

"current_date": date.as we speak()

})

"The present date is 2024-04-05."

LangSmith will seize the internals at every step, supplying you with a outcome like this.

You can even tweak prompts and rerun mannequin calls in a playground. Due to the non-deterministic nature of LLMs, it’s also possible to

Thank you!

You’ve now discovered the fundamentals of:

- LangChain’s Chat Model, Prompt Template, and Output Parser parts

- How to chain parts along with streaming.

- Using exterior data to information mannequin technology.

- How to debug the internals of your chains.

Check out the next for some good assets to proceed your generative AI journey:

You can even observe LangChain on X (previously Twitter) @LangChainAI for the most recent information, or me @Hacubu.

Happy prompting!