The past few years have witnessed rapid advances in the performance, efficiency, and generative capabilities of emerging generative AI models that leverage extensive datasets and 2D diffusion generation practices. Today, generative AI models are extremely capable of generating different forms of 2D and, to some extent, 3D media content, including text, images, videos, GIFs, and more.

In this article, we will be talking about the Zero123++ framework, an image-conditioned diffusion generative AI model that aims to generate 3D-consistent multi-view images from a single-view input. To maximize the advantage gained from prior pretrained generative models, the Zero123++ framework implements numerous training and conditioning schemes to minimize the amount of effort it takes to finetune from off-the-shelf diffusion image models. We will take a deeper dive into the architecture, working, and results of the Zero123++ framework, and analyze its ability to generate consistent, high-quality multi-view images from a single image. So let’s get started.

The Zero123++ framework is an image-conditioned diffusion generative AI model that aims to generate 3D-consistent multi-view images from a single-view input. It is a continuation of the Zero123, or Zero-1-to-3, framework, which leverages a zero-shot novel view image synthesis technique to pioneer open-source single-image-to-3D conversion. Although the Zero-1-to-3 framework delivers promising performance, the images it generates have visible geometric inconsistencies, and this is the main reason why the gap between 3D scenes and multi-view images still exists.

The Zero-1-to-3 framework serves as the foundation for several other frameworks, including SyncDreamer, One-2-3-45, and Consistent123, that add extra layers to the Zero123 framework to obtain more consistent results when generating 3D images. Other frameworks, such as ProlificDreamer, DreamFusion, and DreamGaussian, follow an optimization-based approach and obtain 3D images by distilling a 3D representation from various inconsistent models. Although these techniques are effective and generate satisfactory 3D images, the results could be improved with a base diffusion model capable of generating multi-view images consistently. Accordingly, the Zero123++ framework takes Zero-1-to-3 as its starting point and finetunes a new multi-view base diffusion model from Stable Diffusion.

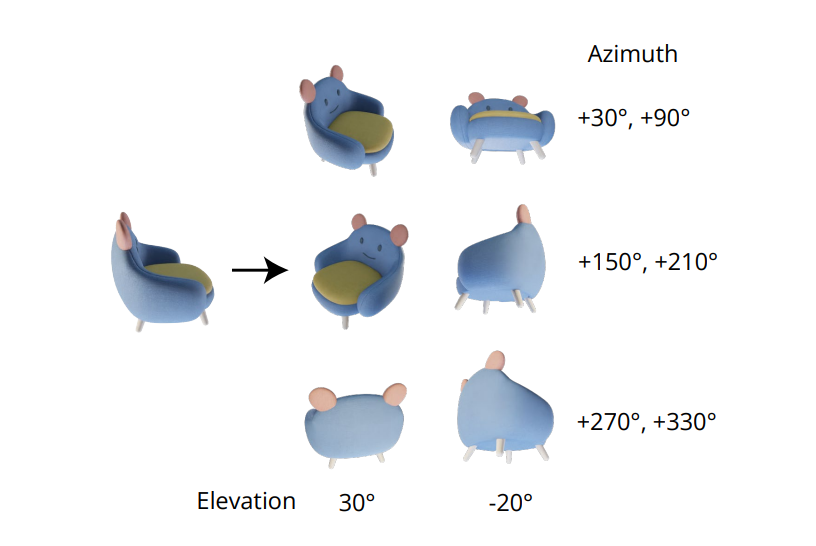

In the Zero-1-to-3 framework, every novel view is generated independently, and because diffusion models are sampling-based, this approach leads to inconsistencies between the generated views. To tackle this issue, the Zero123++ framework adopts a tiling layout approach: six views surrounding the object are tiled into a single image, which allows the model to correctly capture the joint distribution of an object’s multi-view images.

Another major challenge faced by developers working on the Zero-1-to-3 framework is that it underutilizes the capabilities offered by Stable Diffusion, which ultimately leads to inefficiency and added costs. There are two major reasons why the Zero-1-to-3 framework cannot maximize the capabilities offered by Stable Diffusion:

- When training with image conditions, the Zero-1-to-3 framework does not effectively incorporate the local or global conditioning mechanisms offered by Stable Diffusion.

- During training, the Zero-1-to-3 framework uses a reduced resolution, an approach in which the output resolution is reduced below the training resolution of Stable Diffusion, which can reduce the quality of image generation for Stable Diffusion models.

To tackle these issues, the Zero123++ framework implements an array of conditioning techniques that maximize the use of the resources offered by Stable Diffusion and maintain the quality of image generation for Stable Diffusion models.

Improving Conditioning and Consistencies

To improve image conditioning and multi-view image consistency, the Zero123++ framework implements several techniques, with the primary objective of reusing the priors of the pretrained Stable Diffusion model.

Multi-View Generation

The key to generating consistent multi-view images lies in correctly modeling the joint distribution of multiple images. In the Zero-1-to-3 framework, the correlation between multi-view images is ignored because the framework models the conditional marginal distribution of every image independently and separately. In the Zero123++ framework, however, the developers have opted for a tiling layout approach that tiles 6 images into a single frame for consistent multi-view generation, as illustrated in the accompanying figure.
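As a rough illustration of the tiling idea, the sketch below packs six equally sized views into one canvas and splits them back out. The 3×2 grid, the row-major ordering, and the helper names `tile_views` and `untile_views` are assumptions made for this example; the text above only states that six views share a single image.

```python
import numpy as np

def tile_views(views, rows=3, cols=2):
    """Tile equally sized views (H, W, C) into one (rows*H, cols*W, C) canvas.

    Views are placed in row-major order. The 3x2 grid is an assumed layout.
    """
    if len(views) != rows * cols:
        raise ValueError(f"expected {rows * cols} views, got {len(views)}")
    h, w, c = views[0].shape
    grid = np.stack(views).reshape(rows, cols, h, w, c)
    # (rows, cols, h, w, c) -> (rows, h, cols, w, c) -> (rows*h, cols*w, c)
    return grid.transpose(0, 2, 1, 3, 4).reshape(rows * h, cols * w, c)

def untile_views(canvas, rows=3, cols=2):
    """Inverse of tile_views: split the canvas back into individual views."""
    big_h, big_w, c = canvas.shape
    h, w = big_h // rows, big_w // cols
    grid = canvas.reshape(rows, h, cols, w, c).transpose(0, 2, 1, 3, 4)
    return [grid[r, col] for r in range(rows) for col in range(cols)]

# Six dummy views, each filled with its own index so the placement is easy to check.
views = [np.full((4, 5, 3), i, dtype=np.uint8) for i in range(6)]
canvas = tile_views(views)
print(canvas.shape)
```

Because the diffusion model denoises the whole canvas at once, all six views are sampled jointly rather than one at a time, which is the property the tiling layout is meant to provide.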

Furthermore, it has been observed that object orientations tend to be ambiguous when training the model on camera poses, and to avoid this ambiguity, the Zero-1-to-3 framework trains on camera poses defined by elevation angles and azimuth relative to the input. To implement this approach, it is necessary to know the elevation angle of the input view, which is then used to determine the relative poses of the novel views. To obtain this elevation angle, frameworks often add an elevation estimation module, and this approach often comes at the cost of additional errors in the pipeline.
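To see why an elevation estimate can introduce errors, consider the following minimal sketch. It assumes a camera on a unit sphere around the object, with the input view at azimuth 0 and the novel view sharing the input's elevation; the functions `camera_position` and `novel_view_position` are hypothetical helpers for this illustration, not part of any of the frameworks discussed.

```python
import math

def camera_position(elevation_deg, azimuth_deg, radius=1.0):
    """Camera location on a sphere around the object (z axis pointing up)."""
    elev = math.radians(elevation_deg)
    azim = math.radians(azimuth_deg)
    x = radius * math.cos(elev) * math.cos(azim)
    y = radius * math.cos(elev) * math.sin(azim)
    z = radius * math.sin(elev)
    return (x, y, z)

def novel_view_position(input_elevation_deg, relative_azimuth_deg, radius=1.0):
    """Place a novel camera at the input's elevation, rotated by a relative azimuth.

    Assumption for this sketch: the input view sits at azimuth 0.
    """
    return camera_position(input_elevation_deg, relative_azimuth_deg, radius)

# The same novel view, once with the true input elevation and once with an
# estimate that is off by 10 degrees.
true_pos = novel_view_position(20.0, 90.0)
estimated_pos = novel_view_position(30.0, 90.0)
error = math.dist(true_pos, estimated_pos)
print(f"camera displacement caused by the estimation error: {error:.4f}")
```

Any error in the estimated input elevation shifts every novel camera that is placed relative to it, so the mistake carries through to all generated views.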

Noise Schedule

The scaled-linear schedule, the original noise schedule for Stable Diffusion, focuses primarily on local details, and it has very few steps with a low Signal-to-Noise Ratio (SNR).

These low-SNR steps occur early in the denoising stage, a stage crucial for determining the global low-frequency structure. Having fewer steps in this stage, during either inference or training, often results in greater structural variation. Although this setup is ideal for single-image generation, it limits the ability of the framework to ensure global consistency between different views. To examine this effect, the developers of Zero123++ finetune a LoRA model on the Stable Diffusion 2 v-prediction model to perform a toy task, namely overfitting to a blank image, and the results are described below.

With the scaled-linear noise schedule, the LoRA model does not overfit and only whitens the image slightly. Conversely, with the linear noise schedule, the LoRA model successfully generates a blank image regardless of the input prompt, which shows the impact of the noise schedule on the ability of the framework to adapt to new requirements globally.
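The difference between the two schedules can be checked numerically. The sketch below is a minimal comparison, assuming 1000 training steps and the beta range 0.00085 to 0.012 that is commonly used for Stable Diffusion; these values and the SNR threshold of 0.1 are assumptions for illustration and are not stated in this article.

```python
import numpy as np

def snr(betas):
    """Signal-to-noise ratio per timestep: alpha_bar / (1 - alpha_bar)."""
    alphas_cumprod = np.cumprod(1.0 - betas)
    return alphas_cumprod / (1.0 - alphas_cumprod)

T = 1000                               # assumed number of training timesteps
beta_start, beta_end = 0.00085, 0.012  # assumed beta range

# Scaled-linear: interpolate linearly in sqrt(beta), then square.
scaled_linear = np.linspace(beta_start ** 0.5, beta_end ** 0.5, T) ** 2
# Linear: interpolate linearly in beta itself.
linear = np.linspace(beta_start, beta_end, T)

snr_scaled = snr(scaled_linear)
snr_linear = snr(linear)

threshold = 0.1  # arbitrary cut-off for "low SNR" in this sketch
low_scaled = int((snr_scaled < threshold).sum())
low_linear = int((snr_linear < threshold).sum())
print(f"steps with SNR < {threshold}: scaled-linear={low_scaled}, linear={low_linear}")
```

Under these assumptions, the linear schedule adds at least as much noise as the scaled-linear one at every step, so it spends more timesteps in the low-SNR regime where global structure is decided.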

Scaled Reference Attention for Local Conditions

In the Zero-1-to-3 framework, the single-view input, or conditioning image, is concatenated in the feature dimension with the noisy inputs to be denoised for image conditioning.

This concatenation imposes an incorrect pixel-wise spatial correspondence between the target image and the input. To provide a proper local conditioning input, the Zero123++ framework makes use of scaled Reference Attention, an approach in which the denoising UNet model is run on an extra reference image, and the self-attention key and value matrices from the reference image are appended to the respective attention layers when the model input is denoised.

The Reference Attention approach is capable of guiding the diffusion model to generate images that share similar texture and semantic content with the reference image without any finetuning. With fine-tuning, the Reference Attention approach delivers superior results when the latent is scaled.
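The mechanism can be sketched for a single attention layer as follows. This is a simplified, single-head NumPy illustration under stated assumptions: `reference_attention` and its arguments are hypothetical names, and the `ref_scale` factor applied to the reference tokens is only a stand-in for the latent scaling mentioned above.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def reference_attention(x, ref, w_q, w_k, w_v, ref_scale=1.0):
    """Self-attention over x with keys and values from a reference appended.

    x:   (n, d) tokens of the image being denoised at this layer
    ref: (m, d) tokens of the reference image at the same layer
    """
    ref = ref_scale * ref                              # stand-in for latent scaling
    q = x @ w_q                                        # queries come from x only
    k = np.concatenate([x @ w_k, ref @ w_k], axis=0)   # (n + m, d) keys
    v = np.concatenate([x @ w_v, ref @ w_v], axis=0)   # (n + m, d) values
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (n, n + m)
    return weights @ v, weights

rng = np.random.default_rng(0)
d = 8
x = rng.normal(size=(4, d))      # 4 target tokens
ref = rng.normal(size=(6, d))    # 6 reference tokens
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
out, weights = reference_attention(x, ref, w_q, w_k, w_v)
print(out.shape, weights.shape)
```

Each target token can attend to every reference token regardless of position, so no pixel-wise alignment between the input and the target views is imposed.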

Global Conditioning: FlexDiffuse

In the original Stable Diffusion approach, the text embeddings are the only source of global embeddings, and the approach employs CLIP as a text encoder to perform cross-attention between the text embeddings and the model latents. As a result, developers are free to use the alignment between CLIP’s text and image spaces to reuse this prior for global image conditioning.

The Zero123++ framework proposes a trainable variant of the linear guidance mechanism to incorporate global image conditioning into the framework with minimal fine-tuning. Without global image conditioning, the quality of the content generated by the framework is satisfactory for visible regions that correspond to the input image. However, the quality of the generated image for unseen regions deteriorates significantly, mainly because of the model’s inability to infer the object’s global semantics.
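A minimal sketch of linear guidance follows, assuming that the global image embedding is added to each prompt token embedding with a per-token weight, and that the weights start as a linear ramp before being trained. The function name `linear_guidance`, the tensor shapes, and the exact initialisation are assumptions for illustration, not details given in this article.

```python
import numpy as np

def linear_guidance(text_tokens, image_embedding, weights=None):
    """Add a global image embedding to every prompt token embedding.

    text_tokens:     (L, D) prompt token embeddings
    image_embedding: (D,)   global image embedding
    weights:         (L,)   per-token weights; in a trainable variant these
                            would be learned parameters
    """
    num_tokens = text_tokens.shape[0]
    if weights is None:
        # Assumed initialisation: a linear ramp from 1/L up to 1.
        weights = np.arange(1, num_tokens + 1) / num_tokens
    return text_tokens + weights[:, None] * image_embedding[None, :]

rng = np.random.default_rng(0)
tokens = rng.normal(size=(77, 16))    # hypothetical sequence length and width
image_emb = rng.normal(size=(16,))
conditioned = linear_guidance(tokens, image_emb)
print(conditioned.shape)
```

Since the output has the same shape as the original token embeddings, it can be fed to the existing cross-attention layers unchanged, which is why such a mechanism needs little fine-tuning.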

Model Architecture

The Zero123++ framework is trained with the Stable Diffusion 2 v-model as the foundation, using the different approaches and techniques mentioned in this article. It is trained on the Objaverse dataset rendered with random HDRI lighting. The framework also adopts the phased training schedule used in the Stable Diffusion Image Variations framework to further minimize the amount of fine-tuning required and to preserve as much of the Stable Diffusion prior as possible.

The training of the Zero123++ framework can be divided into two sequential phases. In the first phase, the framework fine-tunes the self-attention layers and the KV matrices of the cross-attention layers of Stable Diffusion, with AdamW as its optimizer, 1000 warm-up steps, and a cosine learning rate schedule peaking at 7×10⁻⁵. In the second phase, the framework employs a highly conservative constant learning rate with 2000 warm-up steps, and uses the Min-SNR approach to maximize efficiency during training.
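The two learning rate schedules described above can be sketched as plain functions. The peak rate and warm-up lengths come from the text; the linear warm-up shape, the total number of steps, and the constant rate used in the second phase are assumptions (the article gives no values for them), so `total_steps` and `constant_lr` are left as required arguments.

```python
import math

def phase_one_lr(step, total_steps, peak_lr=7e-5, warmup_steps=1000):
    """Linear warm-up to peak_lr, followed by cosine decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * min(1.0, progress)))

def phase_two_lr(step, constant_lr, warmup_steps=2000):
    """Linear warm-up to a constant learning rate, then hold it."""
    return constant_lr * min(1.0, step / warmup_steps)

# Example with an arbitrary, assumed training length of 10,000 steps.
for step in (0, 500, 1000, 5500, 10000):
    print(step, phase_one_lr(step, total_steps=10_000))
```

In a real training loop, these functions would typically be wrapped in the scheduler interface of the chosen framework rather than called directly.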

Zero123++: Results and Performance Comparison

Qualitative Performance

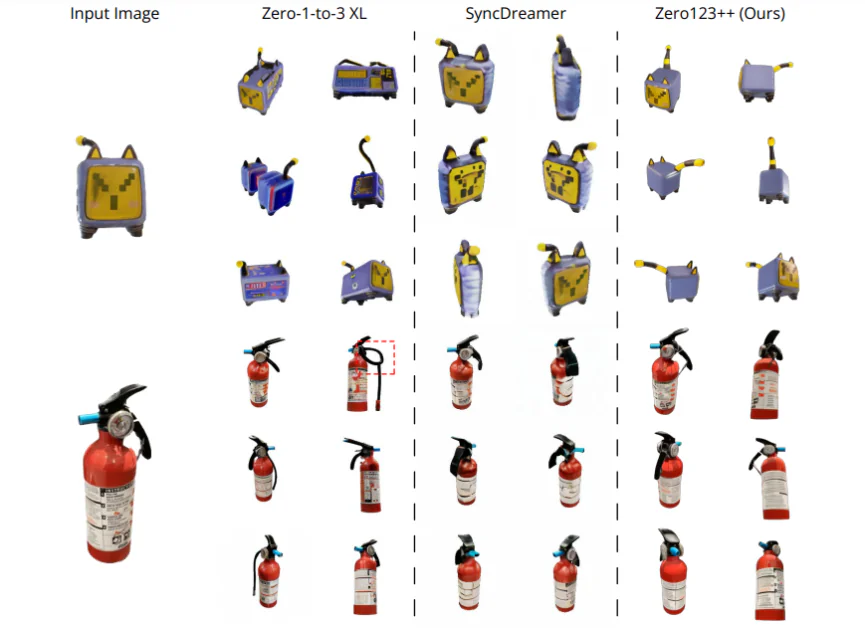

To assess the quality of the images generated by the Zero123++ framework, it is compared against SyncDreamer and Zero-1-to-3 XL, two of the best state-of-the-art frameworks for content generation. The frameworks are compared on four input images of different scope. The first image is an electric toy cat, taken directly from the Objaverse dataset, with a large uncertainty at the rear end of the object. The second is an image of a fire extinguisher, and the third is an image of a dog sitting on a rocket, generated by the SDXL model. The final image is an anime illustration. The elevation angles required by the frameworks are obtained using the One-2-3-45 framework’s elevation estimation method, and background removal is performed using the SAM framework. As can be seen, the Zero123++ framework consistently generates high-quality multi-view images and generalizes equally well to out-of-domain 2D illustrations and AI-generated images.

Quantitative Analysis

To quantitatively compare the Zero123++ framework against the state-of-the-art Zero-1-to-3 and Zero-1-to-3 XL frameworks, the developers evaluate the Learned Perceptual Image Patch Similarity (LPIPS) score of these models on the validation split, a subset of the Objaverse dataset. To evaluate the model’s performance on multi-view image generation, the developers tile the ground truth reference images and the 6 generated images respectively, and then compute the LPIPS score between the two tiled images. The results are shown below, and as can be seen, the Zero123++ framework achieves the best performance on the validation split.

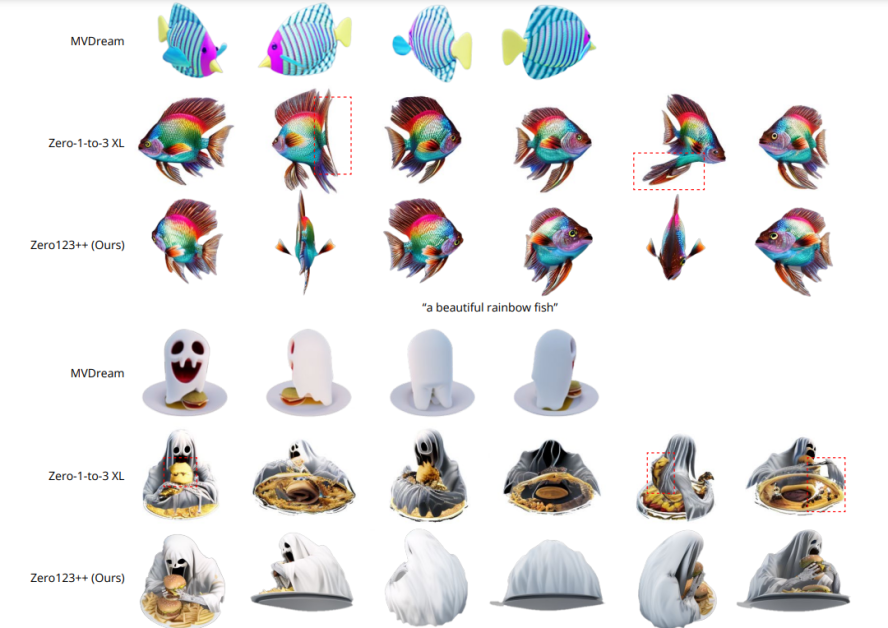

Text to Multi-View Evaluation

To evaluate the Zero123++ framework’s ability in text-to-multi-view content generation, the developers first use the SDXL framework with text prompts to generate an image, and then apply the Zero123++ framework to the generated image. Compared to the Zero-1-to-3 framework, which cannot guarantee consistent multi-view generation, the Zero123++ framework returns consistent, realistic, and highly detailed multi-view images through this text-to-image-to-multi-view pipeline.

Zero123++ Depth ControlNet

In addition to the base Zero123++ framework, the developers have also released Depth ControlNet Zero123++, a depth-controlled version of the original framework built using the ControlNet architecture. Normalized linear depth images are rendered to match the corresponding RGB images, and a ControlNet is trained to control the geometry generated by the Zero123++ framework through depth.

Conclusion

In this article, we have talked about Zero123++, an image-conditioned diffusion generative AI model that aims to generate 3D-consistent multi-view images from a single-view input. To maximize the advantage gained from prior pretrained generative models, the Zero123++ framework implements numerous training and conditioning schemes to minimize the amount of effort it takes to finetune from off-the-shelf diffusion image models. We have also discussed the different approaches and improvements implemented by the Zero123++ framework that help it achieve results comparable to, and even exceeding, those achieved by current state-of-the-art frameworks.

However, despite its efficiency and its ability to generate high-quality multi-view images consistently, the Zero123++ framework still has some room for improvement, with potential areas of research being:

- A two-stage refiner model that can resolve Zero123++’s inability to meet global requirements for consistency.

- Additional scale-ups to further enhance Zero123++’s ability to generate images of even higher quality.