When performing external penetration testing or bug bounty hunting, security professionals explore the target system from various angles to collect as much information as possible and identify potential attack vectors. This involves identifying all the available assets, domains, and subdomains.

During the reconnaissance phase of testing, testers spend time on virtual host enumeration, which is the process of discovering all the virtual hosts associated with a particular IP address or domain. This helps them find hidden or undocumented assets that might be vulnerable or misconfigured.

For example, they might find a virtual host that can be accessed without authentication. This could result in unauthorized access to sensitive data.

In this article, we'll discuss different ways to enumerate virtual hosts and gather information from them. We will use the HTB Academy exercise in the "Information Gathering - Web Edition" module to demonstrate the enumeration steps.

Pre-requisites

Before we start enumerating virtual hosts, we need to install some tools to help us. Most of these tools run on Linux distributions such as Ubuntu and Kali Linux:

- Ffuf

- Gobuster

- Eyewitness

- hakcheckurl

If you don't have these installed, I'll cover the steps below.

Virtual Hosting Overview

Virtual hosting is a feature that allows a single web server to host multiple websites and have them appear as if they're hosted on separate, individual servers. This is usually done to reduce resource overhead and running costs.

There are two types of virtual hosting: IP-based and Name-based.

IP-based Hosting

This type of hosting involves configuring a web server to host multiple websites on a single server. Each hosted website is associated with its own IP address, which can either be dedicated or shared depending on the hosting configuration.

When a user tries to access a website, DNS resolves the hostname to its corresponding IP address, and the server routes the incoming request to the appropriate website based on the IP address the request arrived on.

Once the server has identified the intended website from that IP address, it serves the content associated with that website to the user.

Name-based Hosting

This type of hosting involves configuring a web server to host multiple websites on a single IP address using different domain names. Each hosted website is typically associated with a unique hostname, but multiple hostnames can be mapped to a single website.

When a user requests a website, the server checks the "Host" header in the HTTP request to determine which website the user is trying to reach. Based on the hostname provided in the Host header, the server identifies the specific website and serves the content associated with that website to the user.
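To make the role of the Host header concrete, here is a minimal sketch that prints the raw HTTP/1.1 request a client would send. The helper name build_request and the www hostname are assumptions made for illustration; the point is only that two requests to the same IP address can differ in nothing but the Host line.

```shell
#!/usr/bin/env bash
# Print the raw HTTP/1.1 request for a given virtual host.
# build_request is a hypothetical helper used only for illustration.
build_request() {
  local vhost="$1"
  printf 'GET / HTTP/1.1\r\nHost: %s\r\nConnection: close\r\n\r\n' "$vhost"
}

# Same server, same path; only the Host header changes.
build_request "www.inlanefreight.htb"
build_request "app.inlanefreight.htb"
```

A name-based server receiving both requests on the same IP address would pick the site to serve from the Host line alone.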

Virtual Hosts Enumeration

Ffuf

Ffuf is a tool written in Go that can be installed on Kali Linux by running sudo apt-get install ffuf or downloaded from GitHub. This tool allows you to customize your fuzzing approaches.

To start searching for virtual hosts, we need to pass the IP address of the target using the -u flag and the associated domain name with the -H flag, which sets a request header (here, the Host header).

Then, place the word FUZZ at the beginning of the domain to indicate the fuzzing position.

We can supply different wordlists for identifying virtual hosts with the -w flag. One popular wordlist is the namelist list in the SecLists collection, while another is the Kiterunner wordlist from Assetnote.

ffuf -w namelist.txt -u http://10.129.184.109 -H "HOST: FUZZ.inlanefreight.htb"

Fuzzing can generate numerous results that are sometimes hard to identify as valid or invalid. Filtering down the results can save you time sifting through the output.

You can filter out a single response size, or a comma-separated list of sizes, with the -fs flag, for example -fs 109,208.

ffuf -w namelist.txt -u http://10.129.184.109 -H "HOST: FUZZ.inlanefreight.htb" -fs 10918
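One way to choose the value for -fs is to see which response size dominates an unfiltered run, since that is usually the default page returned for unknown hosts. The sketch below assumes the sizes have already been collected one per line; the helper name most_common_size and the sample numbers are made up for illustration.

```shell
#!/usr/bin/env bash
# Return the most frequent value from a list of response sizes (one per line).
# most_common_size is a hypothetical helper; the sizes below are sample data.
most_common_size() {
  sort -n | uniq -c | sort -k1,1nr | head -n 1 | awk '{print $2}'
}

printf '%s\n' 10918 10918 612 10918 103 10918 | most_common_size
```

The size printed here is the candidate to filter out; anything that differs from it is worth a closer look.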

After the fuzzing is complete, we save the output to a file. Then, we can use the grep utility to search the results for lines that contain the word "FUZZ". Below is an example of using grep to find the lines with the identified subdomains.

cat vhosts | grep FUZZ

* FUZZ: ap

* FUZZ: app

* FUZZ: citrix

Then, we can pipe the grep output to the awk utility to extract only the identified subdomains using the print command, followed by a dollar sign and the column number. The entire command can be written on a single line.

cat vhosts | grep FUZZ | awk '{print $3}'

With the extracted subdomains saved to a file (vhost1 here), a short bash loop appends our original domain name to each one, as seen in Figure 02. The result is written to a different file, vhost2, because redirecting the loop back into vhost1 would empty that file before it is read.

for i in $(cat vhost1); do echo "$i.inlanefreight.htb"; done > vhost2
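The grep, awk, and loop steps can also be collapsed into a single awk pass. This is a sketch that assumes the identified name is the third whitespace-separated field on each FUZZ line, as the awk command above implies; the helper name extract_vhosts and the sample lines are illustrative.

```shell
#!/usr/bin/env bash
# Turn saved fuzzing output into fully qualified hostnames in one pass.
# extract_vhosts is a hypothetical helper; $1 is the parent domain.
extract_vhosts() {
  awk -v domain="$1" '/FUZZ/ { print $3 "." domain }'
}

# Sample lines standing in for the saved output file.
printf '%s\n' '    * FUZZ: app' '[Status: 200, Size: 612]' '    * FUZZ: citrix' |
  extract_vhosts "inlanefreight.htb"
```

Because nothing is redirected back into the input file, this version also avoids the risk of overwriting the list while it is being read.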

Gobuster

Another way to enumerate virtual hosts is with the Gobuster tool using its vhost mode. The tool can be installed on Kali by running sudo apt-get install gobuster or downloaded from GitHub.

To begin the enumeration process, we first need to provide the IP address using the -u flag and specify a wordlist with the -w flag. After that, we define the domain name and the position where the fuzzing starts.

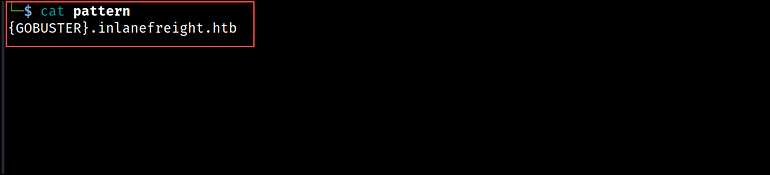

In Gobuster, we define this information in a text file, called a pattern file, that gets passed with the -p flag. You can see an example of a pattern file in Figure 03 below.

{GOBUSTER}.inlanefreight.htb

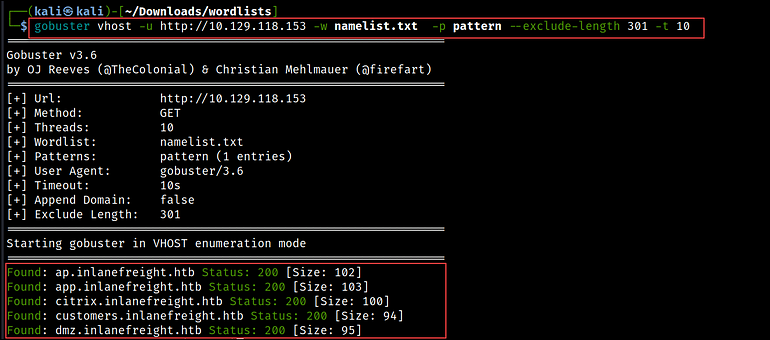

To filter the output, we use the --exclude-length flag to drop responses of a given size. Multiple response sizes can be separated by commas.

gobuster vhost -u http://10.129.118.153 -w namelist.txt -p pattern --exclude-length 301 -t 10
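For reference, the pattern file can be created straight from the shell. This is a minimal sketch: the file name pattern is only an example and must match whatever is passed to -p, and the file is written to a temporary directory here so nothing in the working directory is overwritten.

```shell
#!/usr/bin/env bash
# Write a one-line Gobuster pattern file; {GOBUSTER} marks the fuzzing position.
workdir="$(mktemp -d)"
printf '%s\n' '{GOBUSTER}.inlanefreight.htb' > "$workdir/pattern"

# Show the result to confirm the placeholder survived shell quoting.
cat "$workdir/pattern"
```

Single quotes matter here: they stop the shell from treating the braces as anything other than literal text.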

Curl

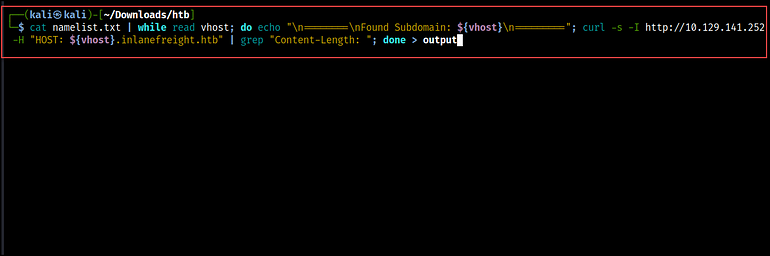

We can achieve the same thing with Curl and some bash scripting. The first half of the loop below reads the contents of the namelist file, which serves as our wordlist, and prints the message "Found Subdomain" for each subdomain it reads from the file.

cat namelist.txt | while read vhost; do echo -e "\n========\nFound Subdomain: ${vhost}\n=========";

Then, the curl command makes an HTTP HEAD request to the specified IP address (http://10.129.141.252) for each entry, passing the subdomain from the wordlist in the Host header.

The output is piped to grep to extract the Content-Length of each response, and the loop's combined output is saved to a file. This second half closes the loop, so both parts are entered together as one command.

curl -s -I http://10.129.141.252 -H "HOST: ${vhost}.inlanefreight.htb" | grep "Content-Length: "; done > output

To search the output, we use the grep command again and filter for the lines that contain the text "Content-Length:". Because uniq only collapses adjacent duplicate lines, we sort the matches first, then use uniq with the -c flag to count the number of times each unique line occurs.

cat output | grep "Content-Length:" | sort | uniq -c

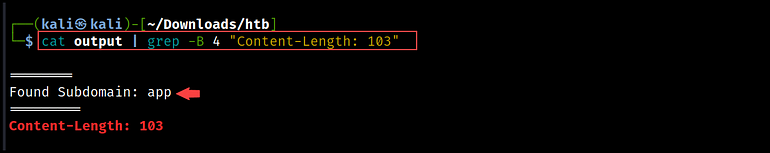

If we want to extract the subdomains from the output, we can use the -B flag to display a few lines before each match. In this command, we print the 4 preceding lines to retrieve the subdomain names.

cat output | grep -B 4 "Content-Length: 103"
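As an alternative to grep -B, the saved output can be reduced to one line per host with awk. The sketch below assumes the file contains a "Found Subdomain:" line followed by a "Content-Length:" line for each host, as produced by the loop above; the helper name pair_sizes, the sample hosts, and the sizes are illustrative.

```shell
#!/usr/bin/env bash
# Print "<subdomain> <content-length>" for every host whose size differs
# from the default size given as $1. pair_sizes is a hypothetical helper.
pair_sizes() {
  awk -v skip="$1" '
    { sub(/\r$/, "") }                      # curl header lines end in CR
    /^Found Subdomain: /  { host = $3 }
    /^Content-Length: /   { if ($2 != skip) print host, $2 }
  '
}

# Sample data standing in for the output file.
printf 'Found Subdomain: www\nContent-Length: 10918\r\nFound Subdomain: app\nContent-Length: 103\r\n' |
  pair_sizes 10918
```

Passing the default page size as the argument leaves only the hosts that returned something different.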

Post Enumeration

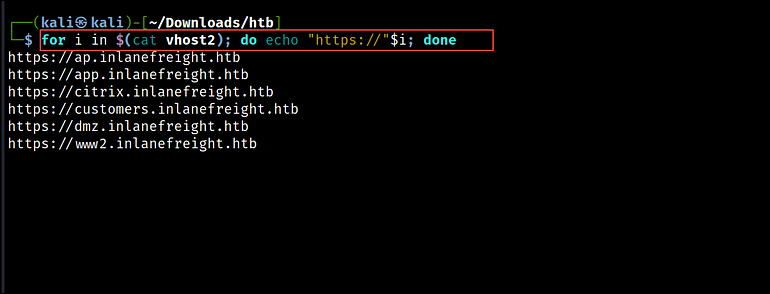

After identifying the virtual hosts, we prepend http:// or https:// to each one to generate a list of URLs. We can use a one-line bash loop to do that.

for i in $(cat vhost2); do echo "https://$i"; done > vhosts3

This list can then be passed to other tools like hakcheckurl or Eyewitness to retrieve HTTP response codes, check for available web pages, and capture screenshots.
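Since we do not know in advance which scheme each host answers on, it can help to emit both. A minimal sketch, with a hypothetical helper name (to_urls) and sample hostnames:

```shell
#!/usr/bin/env bash
# Read hostnames on stdin and print an http:// and https:// URL for each.
to_urls() {
  while IFS= read -r host; do
    [ -n "$host" ] || continue          # skip blank lines
    printf 'http://%s\nhttps://%s\n' "$host" "$host"
  done
}

printf '%s\n' app.inlanefreight.htb citrix.inlanefreight.htb | to_urls
```

Reading line by line with read -r avoids the word-splitting surprises that a for loop over $(cat file) can cause.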

hakcheckurl

hakcheckurl is a tool written in Go by hakluke and is available on GitHub. The tool takes a list of URLs and returns their corresponding HTTP response codes.

To run the tool, you will need to have Go installed. Follow the steps on Go's official website for installing it in a Linux environment.

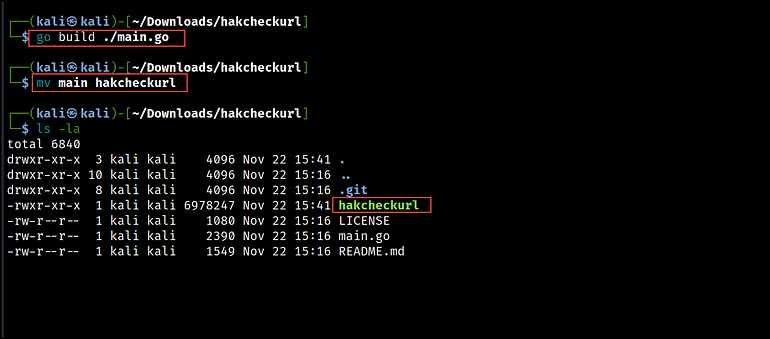

After installation, clone the hakcheckurl repository, change into its directory, build the tool with go build, and rename the binary to hakcheckurl.

git clone https://github.com/hakluke/hakcheckurl.git

cd hakcheckurl

go build ./main.go

# rename the binary to hakcheckurl instead of main

mv main hakcheckurl

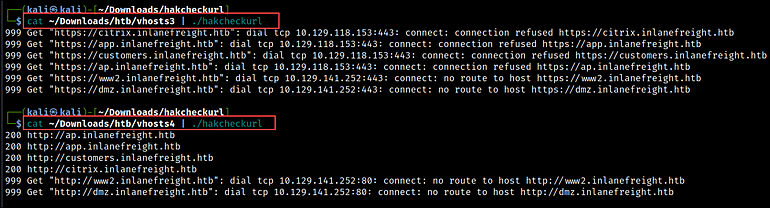

Next, we use the hakcheckurl tool to determine the HTTP response code for each URL. In the results below, you can see that the URLs that used the HTTPS protocol were unreachable, while those that used the HTTP protocol returned 200 response codes. This indicates that the web pages using HTTP are up and running.

cat vhosts | ./hakcheckurl
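To keep only the pages that responded successfully, the results can be filtered with awk. This sketch assumes each result line has the form "<status> <url>", which should be checked against the output of your own run; the helper name live_urls and the sample lines are invented for illustration.

```shell
#!/usr/bin/env bash
# Keep only URLs whose status code is 200.
# Assumes input lines look like "<status> <url>"; live_urls is hypothetical.
live_urls() {
  awk '$1 == 200 { print $2 }'
}

# Sample lines standing in for real results.
printf '%s\n' '200 http://app.inlanefreight.htb' '404 http://ap.inlanefreight.htb' | live_urls
```

The filtered list is a reasonable input for a screenshot pass, since it skips hosts that did not return a page.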

Eyewitness

Once we have identified the web pages that we want to inspect, we can use Eyewitness to gather more information about the underlying infrastructure and the technologies associated with the targeted websites.

Eyewitness is a tool created by RedSiege that can capture screenshots, retrieve header information, and identify default credentials, if any are known. We can install it on Kali with sudo apt-get install eyewitness or download it from GitHub.

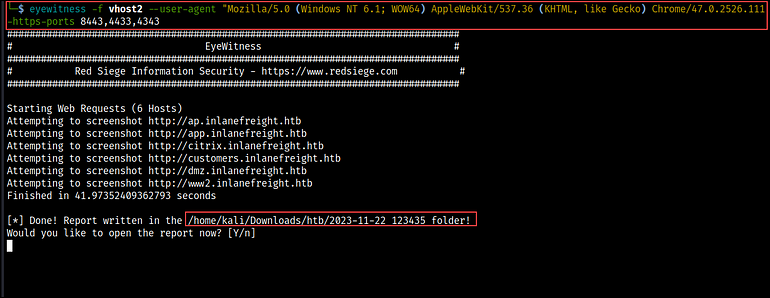

To run Eyewitness, we need to pass the list of URLs using the -f flag. Then, we can set a custom User-Agent string for the HTTP requests with the --user-agent flag. This can be useful for simulating requests from different browsers or client applications.

We can also specify additional ports to check over HTTP and HTTPS using the --add-http-ports and --add-https-ports flags. This instructs Eyewitness to connect to these ports and capture screenshots, if applicable.

eyewitness -f vhost2 --user-agent "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.111 Safari/537.36" --add-http-ports 8080,8000,8088 --add-https-ports 8443,4433,4343

After it runs, we are prompted to choose whether or not to open the report that has been created. If you select 'Y', the default web browser opens the report. If you choose 'N', the report is not opened but remains saved on your local system.

Wrapping Up

With that, we have reached the end of today's tutorial. Throughout the article, you have discovered and explored various tools to enumerate virtual hosts. We also discussed how to use the results from these tools to expand the attack surface and gain valuable insights into the target's infrastructure.

Thank you for taking the time to read this post. I also created a cheatsheet for you on Notion that lists all the commands we used in this post.