The generative AI revolution embodied in tools like ChatGPT, Midjourney, and many others is at its core based on a simple formula: Take a very large neural network, train it on a huge dataset scraped from the Web, and then use it to fulfill a broad range of user requests. Large language models (LLMs) can answer questions, write code, and spout poetry, while image-generating systems can create convincing cave paintings or contemporary art.

So why haven’t these amazing AI capabilities translated into the kinds of helpful and broadly useful robots we’ve seen in science fiction? Where are the robots that can clear off the table, fold your laundry, and make you breakfast?

Unfortunately, the highly successful generative AI formula (large models trained on lots of Internet-sourced data) doesn’t easily carry over into robotics, because the Internet is not full of robotic-interaction data in the same way that it’s full of text and images. Robots need robot data to learn from, and this data is typically created slowly and tediously by researchers in laboratory environments for very specific tasks. Despite tremendous progress on robot-learning algorithms, without abundant data we still can’t enable robots to perform real-world tasks (like making breakfast) outside the lab. The most impressive results typically work only in a single laboratory, on a single robot, and often involve only a handful of behaviors.

If the abilities of each robot are limited by the time and effort it takes to manually teach it to perform a new task, what if we were to pool together the experiences of many robots, so a new robot could learn from all of them at once? We decided to give it a try. In 2023, our labs at Google and the University of California, Berkeley came together with 32 other robotics laboratories in North America, Europe, and Asia to undertake the RT-X project, with the goal of assembling data, resources, and code to make general-purpose robots a reality.

Here is what we learned from the first phase of this effort.

How to create a generalist robot

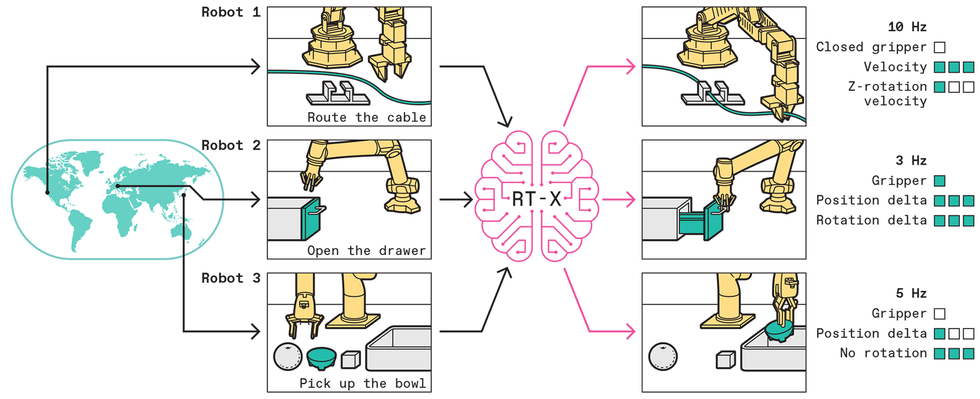

Humans are far better at this kind of learning. Our brains can, with a little practice, handle what are essentially changes to our body plan, which happens when we pick up a tool, ride a bicycle, or get in a car. That is, our “embodiment” changes, but our brains adapt. RT-X is aiming for something similar in robots: to enable a single deep neural network to control many different types of robots, a capability called cross-embodiment. The question is whether a deep neural network trained on data from a sufficiently large number of different robots can learn to “drive” all of them, even robots with very different appearances, physical properties, and capabilities. If so, this approach could potentially unlock the power of large datasets for robot learning.

The scale of this project is very large because it has to be. The RT-X dataset currently contains nearly 1 million robotic trials for 22 types of robots, including many of the most commonly used robotic arms on the market. The robots in this dataset perform a huge range of behaviors, including picking and placing objects, assembly, and specialized tasks like cable routing. In total, there are about 500 different skills and interactions with thousands of different objects. It’s the largest open-source dataset of real robotic actions in existence.
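To make the idea of a pooled, cross-embodiment dataset more concrete, here is a minimal sketch of how trials from many robots might be recorded and summarized. The `Episode` record, its fields, and the robot names are hypothetical and far simpler than the released RT-X data; the toy entries are illustrative only and are not real RT-X statistics.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    """One robot trial (hypothetical, heavily simplified schema)."""
    robot: str        # embodiment label, e.g. "widowx" (illustrative)
    instruction: str  # natural-language task description
    num_steps: int    # number of timesteps recorded in the trial


def summarize(episodes: list[Episode]) -> dict:
    """Count trials, distinct embodiments, and distinct instructions."""
    per_robot = Counter(ep.robot for ep in episodes)
    return {
        "trials": len(episodes),
        "robot_types": len(per_robot),
        "distinct_instructions": len({ep.instruction for ep in episodes}),
        "trials_per_robot": dict(per_robot),
    }


# Toy data for illustration only; these are not real RT-X statistics.
toy = [
    Episode("ur10", "route the cable through the clip", 120),
    Episode("widowx", "put the banana on the plate", 40),
    Episode("widowx", "open the drawer", 55),
    Episode("google_robot", "pick up the apple", 60),
]
print(summarize(toy))
```

The key point the sketch tries to capture is that every trial, whatever robot produced it, lands in one shared collection that a single model can be trained on.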

Surprisingly, we found that our multirobot data could be used with relatively simple machine-learning methods, provided that we follow the recipe of using large neural-network models with large datasets. Leveraging the same kinds of models used in current LLMs like ChatGPT, we were able to train robot-control algorithms that don’t require any special features for cross-embodiment. Much like a person can drive a car or ride a bicycle using the same brain, a model trained on the RT-X dataset can simply recognize what kind of robot it’s controlling from what it sees in the robot’s own camera observations. If the robot’s camera sees a UR10 industrial arm, the model sends commands appropriate to a UR10. If the model instead sees a low-cost WidowX hobbyist arm, the model moves it accordingly.

To test the capabilities of our model, five of the laboratories involved in the RT-X collaboration each tested it in a head-to-head comparison against the best control system they had developed independently for their own robot. Each lab’s test involved the tasks it was using for its own research, which included things like picking up and moving objects, opening doors, and routing cables through clips. Remarkably, the single unified model outperformed each laboratory’s own best method, succeeding at the tasks about 50 percent more often on average.
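The headline comparison is a relative improvement: succeeding “about 50 percent more often” means the unified model’s success rate is roughly 1.5 times the lab’s own. The sketch below shows that arithmetic for five labs; the success rates are invented for illustration and are not the RT-X results.

```python
from statistics import mean


def relative_improvement(baseline: float, new: float) -> float:
    """Fractional gain in success rate: 0.5 means 'succeeds 50 percent more often'."""
    if baseline <= 0:
        raise ValueError("baseline success rate must be positive")
    return new / baseline - 1.0


# Hypothetical (lab's own method, unified model) success rates; NOT real data.
labs = {
    "lab_a": (0.40, 0.60),
    "lab_b": (0.50, 0.70),
    "lab_c": (0.20, 0.32),
    "lab_d": (0.30, 0.45),
    "lab_e": (0.60, 0.90),
}

# Option 1: average the per-lab relative gains.
avg = mean(relative_improvement(b, n) for b, n in labs.values())
# Option 2: relative gain of the pooled (mean) success rates.
pooled = relative_improvement(mean(b for b, _ in labs.values()),
                              mean(n for _, n in labs.values()))
print(f"mean per-lab gain: {avg:.3f}, pooled gain: {pooled:.3f}")
```

With these made-up numbers, averaging per-lab ratios gives 0.5 while comparing pooled rates gives about 0.485, so the two conventions can differ; the article does not say which averaging was used.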

While this result might seem surprising, we found that the RT-X controller could leverage the diverse experiences of other robots to improve robustness in different settings. Even within the same laboratory, every time a robot attempts a task, it finds itself in a slightly different situation, and so drawing on the experiences of other robots in other situations helped the RT-X controller cope with natural variability and edge cases. Here are a few examples of the range of these tasks:

Building robots that can reason

Encouraged by our success with combining data from many robot types, we next sought to investigate how such data can be incorporated into a system with more in-depth reasoning capabilities. Complex semantic reasoning is hard to learn from robot data alone. While the robot data can provide a range of physical capabilities, more complex tasks like “Move apple between can and orange” also require understanding the semantic relationships between objects in an image, basic common sense, and other symbolic knowledge that is not directly related to the robot’s physical capabilities.

So we decided to add another massive source of data to the mix: Internet-scale image and text data. We used an existing large vision-language model that is already proficient at many tasks that require some understanding of the connection between natural language and images. The model is similar to the ones available to the public, such as ChatGPT or Bard. These models are trained to output text in response to prompts containing images, allowing them to solve problems such as visual question-answering, captioning, and other open-ended visual understanding tasks. We discovered that such models can be adapted to robotic control simply by training them to also output robot actions in response to prompts framed as robotic commands (such as “Put the banana on the plate”). We applied this approach to the robotics data from the RT-X collaboration.
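One way to let a text-generating model “output robot actions” is to write each action as a short string of integers that the model can emit like ordinary text. The sketch below assumes each action dimension is normalized to [-1, 1] and split into 256 uniform bins, and it uses a question-style prompt template; these choices are loosely modeled on published descriptions of Google’s RT-2 model and are assumptions, not the code used in this work.

```python
NUM_BINS = 256  # assumed number of uniform bins per action dimension


def make_prompt(instruction: str) -> str:
    """Frame a robot command as a question for a vision-language model (assumed template)."""
    return f"Q: what action should the robot take to {instruction.lower()}? A:"


def encode_action(action: list[float], low: float = -1.0, high: float = 1.0) -> str:
    """Map each continuous action dimension to an integer bin and join them as text."""
    tokens = []
    for x in action:
        x = min(max(x, low), high)  # clip into the assumed normalized range
        bin_index = round((x - low) / (high - low) * (NUM_BINS - 1))
        tokens.append(str(bin_index))
    return " ".join(tokens)


def decode_action(text: str, low: float = -1.0, high: float = 1.0) -> list[float]:
    """Parse integer bins emitted by the model and map them back to continuous values."""
    return [low + int(tok) / (NUM_BINS - 1) * (high - low) for tok in text.split()]


prompt = make_prompt("Put the banana on the plate")
target = encode_action([0.0, 0.5, -1.0, 1.0])  # text the model would be trained to emit
print(prompt)
print(target, decode_action(target))
```

Here `encode_action([0.0, 0.5, -1.0, 1.0])` yields `"128 191 0 255"`, and decoding recovers each value to within half a bin width (1/255 with these settings). The number of bins is the main design trade-off: more bins give finer control but more distinct action tokens for the model to learn.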

The RT-X model uses images or text descriptions of specific robot arms doing different tasks to output a series of discrete actions that will allow any robot arm to do those tasks. By collecting data from many robots doing many tasks from robotics labs around the world, we are building an open-source dataset that can be used to teach robots to be generally useful.
Chris Philpot


To evaluate the combination of Internet-acquired smarts and multirobot data, we tested our RT-X model with Google’s mobile manipulator robot. We gave it our hardest generalization benchmark tests. The robot had to recognize objects and successfully manipulate them, and it also had to respond to complex text commands by making logical inferences that required integrating information from both text and images. The latter is one of the things that make humans such good generalists. Could we give our robots at least a hint of such capabilities?

Even without specific training, this Google research robot is able to follow the instruction “move apple between can and orange.” This capability is enabled by RT-X, a large robotic manipulation dataset and the first step toward a general robotic brain.

We conducted two sets of evaluations. As a baseline, we used a model that excluded all of the generalized multirobot RT-X data that didn’t involve Google’s robot. Google’s robot-specific dataset is in fact the largest part of the RT-X dataset, with over 100,000 demonstrations, so the question of whether all the other multirobot data would actually help in this case was very much open. Then we tried again with all that multirobot data included.
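The two training sets in this comparison differ only in which robots’ data they keep. A minimal sketch, assuming simple dictionary records and a hypothetical `"google_robot"` label (neither comes from the actual RT-X tooling):

```python
def make_training_sets(episodes: list[dict], target_robot: str) -> tuple[list[dict], list[dict]]:
    """Return (baseline, full) training sets for an ablation on multirobot data.

    baseline keeps only the target robot's own episodes; full keeps everything.
    """
    baseline = [ep for ep in episodes if ep["robot"] == target_robot]
    full = list(episodes)
    return baseline, full


# Toy episode records; robot labels and instructions are illustrative only.
episodes = [
    {"robot": "google_robot", "instruction": "pick up the apple"},
    {"robot": "google_robot", "instruction": "open the top drawer"},
    {"robot": "google_robot", "instruction": "move the can near the orange"},
    {"robot": "widowx", "instruction": "put the banana on the plate"},
    {"robot": "ur10", "instruction": "route the cable through the clip"},
]

baseline, full = make_training_sets(episodes, "google_robot")
share = len(baseline) / len(full)  # fraction of the full mix from the target robot
print(len(baseline), len(full), f"{share:.0%}")
```

In a controlled comparison like this, the model, training recipe, and evaluation tasks would be held fixed, so a difference in generalization can be attributed to the added data from other robots.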

In one of the most difficult evaluation scenarios, the Google robot needed to accomplish a task that involved reasoning about spatial relations (“Move apple between can and orange”); in another task it had to solve rudimentary math problems (“Place an object on top of a paper with the solution to ‘2+3’”). These challenges were meant to test the crucial capabilities of reasoning and drawing conclusions.

In this case, the reasoning capabilities (such as the meaning of “between” and “on top of”) came from the Web-scale data included in the training of the vision-language model, while the ability to ground the reasoning outputs in robotic behaviors (commands that actually moved the robot arm in the right direction) came from training on cross-embodiment robot data from RT-X. Some examples of evaluations where we asked the robots to perform tasks not included in their training data are shown below.

While these tasks are rudimentary for humans, they present a major challenge for general-purpose robots. Without robot demonstration data that clearly illustrates concepts like “between,” “near,” and “on top of,” even a system trained on data from many different robots would not be able to figure out what these commands mean. By integrating Web-scale knowledge from the vision-language model, our complete system was able to solve such tasks, deriving the semantic concepts (in this case, spatial relations) from Internet-scale training, and the physical behaviors (picking up and moving objects) from multirobot RT-X data. To our surprise, we found that the inclusion of the multirobot data improved the Google robot’s ability to generalize to such tasks by a factor of three. This result suggests that not only was the multirobot RT-X data useful for acquiring a variety of physical skills, it could also help to better connect such skills to the semantic and symbolic knowledge in vision-language models. These connections give the robot a degree of common sense, which could one day enable robots to understand the meaning of complex and nuanced user commands like “Bring me my breakfast” while carrying out the actions to make it happen.

The next steps for RT-X

The RT-X project shows what is possible when the robot-learning community acts together. Because of this cross-institutional effort, we were able to put together a diverse robotic dataset and carry out comprehensive multirobot evaluations that wouldn’t be possible at any single institution. Since the robotics community can’t rely on scraping the Internet for training data, we need to create that data ourselves. We hope that more researchers will contribute their data to the RT-X database and join this collaborative effort. We also hope to provide tools, models, and infrastructure to support cross-embodiment research. We plan to go beyond sharing data across labs, and we hope that RT-X will grow into a collaborative effort to develop data standards, reusable models, and new techniques and algorithms.

Our early results hint at how large cross-embodiment robotics models could transform the field. Much as large language models have mastered a wide range of language-based tasks, in the future we might use the same foundation model as the basis for many real-world robotic tasks. Perhaps new robotic skills could be enabled by fine-tuning or even prompting a pretrained foundation model. In the same way that you can prompt ChatGPT to tell a story without first training it on that particular story, you could ask a robot to write “Happy Birthday” on a cake without having to tell it how to use a piping bag or what handwritten text looks like. Of course, much more research is needed for these models to take on that kind of general capability, as our experiments have focused on single arms with two-finger grippers doing simple manipulation tasks.

As more labs engage in cross-embodiment research, we hope to further push the frontier of what is possible with a single neural network that can control many robots. These advances might include adding diverse simulated data from generated environments, handling robots with different numbers of arms or fingers, using different sensor suites (such as depth cameras and tactile sensing), and even combining manipulation and locomotion behaviors. RT-X has opened the door for such work, but the most exciting technical developments are still ahead.

This is just the beginning. We hope that with this first step, we can together create the future of robotics: one where general robotic brains can power any robot, benefiting from data shared by all robots around the world.
