Large Language Models (LLMs) have recently taken center stage, thanks to standout performers like ChatGPT. When Meta released their Llama models, it sparked a renewed interest in open-source LLMs. The aim? To create affordable, open-source LLMs that are as good as top-tier models such as GPT-4, but without the hefty price tag or complexity.

This mix of affordability and efficiency not only opened up new avenues for researchers and developers but also set the stage for a new era of technological advancements in natural language processing.

Recently, generative AI startups have been on a roll with funding. Together raised $20 million, aiming to shape open-source AI. Anthropic also raised an impressive $450 million, and Cohere, partnering with Google Cloud, secured $270 million in June this year.

Introduction to Mistral 7B: Size & Availability

Mistral AI, based in Paris and co-founded by alums from Google’s DeepMind and Meta, announced its first large language model: Mistral 7B. Anyone can download this model from GitHub or even via a 13.4-gigabyte torrent.

The startup managed to secure record-breaking seed funding even before it had a product out. Its first model, with 7 billion parameters, surpasses Llama 2 13B on all tests and beats Llama 1 34B on many metrics.

Compared to other models like Llama 2, Mistral 7B provides similar or better capabilities with less computational overhead. While foundation models like GPT-4 can achieve more, they come at a higher cost and aren’t as user-friendly, since they’re mainly accessible through APIs.

When it comes to coding tasks, Mistral 7B gives CodeLlama 7B a run for its money. Plus, at 13.4 GB it is compact enough to run on standard machines.
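As a rough sanity check on the 13.4 GB figure, the memory needed just for the weights is the parameter count times the bytes per parameter. The sketch below assumes roughly 7.24 billion parameters for Mistral 7B (an approximate figure) and 16-bit weights; the helper name `weight_memory_gib` and the dtype table are illustrative, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, the KV cache, and framework overhead.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16": 2.0,  # bfloat16 uses the same 2 bytes
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gib(n_params: float, dtype: str = "float16") -> float:
    """Return the approximate size of the model weights in GiB (2**30 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    mistral_params = 7.24e9  # assumed approximate parameter count for Mistral 7B
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gib(mistral_params, dtype):6.2f} GiB")
```

At 16-bit precision this comes to roughly 13.5 GiB, close to the download size quoted above; 8-bit or 4-bit quantization would shrink it further, typically at some cost in quality.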

Additionally, Mistral 7B Instruct, fine-tuned on instruction datasets publicly available on Hugging Face, has shown great performance. It outperforms other 7B models on MT-Bench and stands shoulder to shoulder with 13B chat models.

Hugging Face Mistral 7B Example
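Mistral 7B Instruct expects user turns to be wrapped in `[INST]` and `[/INST]` markers. The helper below is a hypothetical sketch (`build_mistral_instruct_prompt` is not part of any library) that assembles a multi-turn prompt in the format shown on the Mistral-7B-Instruct-v0.1 model card, as we understand it. Exact whitespace may differ, and in practice the tokenizer’s own `apply_chat_template` method in Hugging Face `transformers` is the safer choice.

```python
from typing import List, Optional, Tuple


def build_mistral_instruct_prompt(turns: List[Tuple[str, Optional[str]]]) -> str:
    """Assemble a prompt from (user_message, assistant_reply) pairs.

    The final pair may have reply=None, meaning the model should answer next.
    """
    prompt = "<s>"  # beginning-of-sequence marker, emitted once at the start
    for idx, (user, reply) in enumerate(turns):
        prompt += f"[INST] {user} [/INST]"
        if reply is None:
            if idx != len(turns) - 1:
                raise ValueError("only the last turn may omit the assistant reply")
        else:
            # Each completed assistant reply is closed with the end-of-sequence marker.
            prompt += f" {reply}</s>"
    return prompt


if __name__ == "__main__":
    print(build_mistral_instruct_prompt([
        ("What is sliding window attention?",
         "It limits each token to a fixed window of earlier tokens."),
        ("Why does that save memory?", None),
    ]))
```

Because `<s>` is the beginning-of-sequence token, a tokenizer that adds special tokens automatically may insert it a second time, so a string built this way would usually be tokenized without adding special tokens.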

Performance Benchmarking

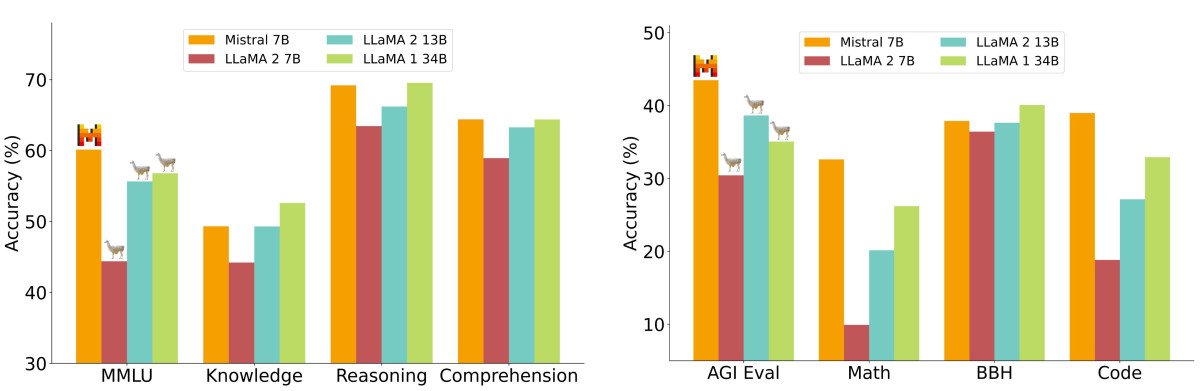

In a detailed performance analysis, Mistral 7B was measured against the Llama 2 family of models. The results were clear: Mistral 7B significantly surpassed Llama 2 13B across all benchmarks. In fact, it matched the performance of Llama 1 34B, standing out especially in code and reasoning benchmarks.

The benchmarks were organized into several categories, such as Commonsense Reasoning, World Knowledge, Reading Comprehension, Math, and Code, among others. A particularly noteworthy observation was Mistral 7B’s cost-performance metric, termed “equivalent model sizes”. In areas like reasoning and comprehension, Mistral 7B performed like a Llama 2 model three times its size, signifying potential savings in memory and an uptick in throughput. However, on knowledge benchmarks, Mistral 7B aligned closely with Llama 2 13B, which is likely attributable to its limited parameter count constraining how much knowledge it can compress.

What actually makes the Mistral 7B model better than most other language models?

Simplifying Attention Mechanisms

While the subtleties of attention mechanisms are technical, their foundational idea is relatively simple. Imagine reading a book and highlighting important sentences; this is analogous to how attention mechanisms “highlight” or give importance to specific data points in a sequence.

In the context of language models, these mechanisms enable the model to focus on the most relevant parts of the input data, helping keep the output coherent and contextually accurate.

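In standard transformers, attention is calculated with the formula:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V$$

where Q, K, and V are the query, key, and value matrices and d_k is the dimension of the key vectors.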

The formula involves a crucial step: the matrix multiplication of Q and the transpose of K. For a sequence of n tokens, this product is an n × n matrix of attention scores, so computation and memory grow quadratically as the sequence gets longer. This scalability issue is one of the main reasons standard transformers can be slow, especially when dealing with long sequences.
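The quadratic cost is easy to see in code. Below is a minimal NumPy sketch of standard scaled dot-product attention, softmax(QKᵀ/√d_k)V, for a single head without batching. The function names are illustrative, and the optional boolean mask is an addition used here to show causal masking. The `scores` array has shape (n, n), so doubling the sequence length quadruples its size.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, mask=None):
    """q, k: (n, d_k); v: (n, d_v); mask: optional boolean (n, n), True = may attend.

    Returns the attention output (n, d_v) and the attention weights (n, n).
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)  # (n, n): grows quadratically with n
    if mask is not None:
        # Disallowed positions get -inf so softmax assigns them zero weight.
        scores = np.where(mask, scores, -np.inf)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d_k, d_v = 6, 4, 3
    q, k = rng.standard_normal((n, d_k)), rng.standard_normal((n, d_k))
    v = rng.standard_normal((n, d_v))
    causal = np.tril(np.ones((n, n), dtype=bool))  # each token sees itself and earlier tokens
    out, weights = scaled_dot_product_attention(q, k, v, causal)
    print(out.shape, weights.shape)
```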

Attention mechanisms help models focus on specific parts of the input data. Typically, these mechanisms use ‘heads’ to manage this attention. The more heads you have, the more fine-grained the attention can be, but it also becomes more complex and slower.

Multi-query attention (MQA) speeds things up by using a single ‘key-value’ head shared by all query heads, but it often sacrifices quality. Now, you might wonder: why not combine the speed of MQA with the quality of multi-head attention? That’s where Grouped-query attention (GQA) comes in.
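One concrete reason fewer key-value heads speed up generation is the KV cache: during decoding, the keys and values of previous tokens are stored and re-read at every step. The arithmetic below assumes the Mistral 7B configuration reported in its paper (32 layers, 32 query heads, 8 key-value heads, head dimension 128) and 16-bit values; the MHA and MQA rows are hypothetical variants of the same model that differ only in the number of key-value heads, and `kv_cache_bytes_per_token` is an illustrative helper.

```python
def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Bytes of KV cache needed per token of context."""
    # Factor of 2: every layer stores one key and one value vector per KV head.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


if __name__ == "__main__":
    n_layers, head_dim = 32, 128  # assumed Mistral 7B values
    variants = {"MHA (32 KV heads)": 32, "GQA (8 KV heads)": 8, "MQA (1 KV head)": 1}
    for name, n_kv in variants.items():
        per_token = kv_cache_bytes_per_token(n_layers, n_kv, head_dim)
        print(f"{name}: {per_token // 1024} KiB/token, "
              f"{per_token * 4096 / 2**20:.0f} MiB for 4,096 tokens")
```

Under these assumptions, GQA with 8 key-value heads needs a quarter of the cache that full multi-head attention would, while MQA would need only 1/32 of it.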

Grouped-query Attention (GQA)

GQA is a middle-ground solution. Instead of using just one ‘key-value’ head or one per query head, it divides the query heads into groups, and each group shares a single key-value head. This way, GQA achieves quality close to full multi-head attention with speed close to MQA. For models like Mistral, this means efficient performance without compromising too much on quality.
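A minimal NumPy sketch of the grouping idea follows, with causal masking and batching omitted; the function name is illustrative and this is not Mistral’s own implementation. Each key-value head is repeated so that it lines up with the query heads in its group: with 8 query heads and 2 key-value heads, query heads 0 to 3 share key-value head 0 and heads 4 to 7 share head 1. Setting the number of key-value heads equal to the number of query heads recovers multi-head attention, and setting it to 1 recovers MQA.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def grouped_query_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """q: (n_q_heads, n, d); k, v: (n_kv_heads, n, d). Returns (n_q_heads, n, d).

    Query head h uses key-value head h // (n_q_heads // n_kv_heads).
    """
    n_q_heads, _, d = q.shape
    n_kv_heads = k.shape[0]
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into key-value groups")
    group_size = n_q_heads // n_kv_heads
    # Repeat each KV head group_size times: [kv0, kv0, ..., kv1, kv1, ...].
    k = np.repeat(k, group_size, axis=0)
    v = np.repeat(v, group_size, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)  # (n_q_heads, n, n)
    return softmax(scores, axis=-1) @ v


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_q_heads, n_kv_heads, seq_len, head_dim = 8, 2, 5, 4
    q = rng.standard_normal((n_q_heads, seq_len, head_dim))
    k = rng.standard_normal((n_kv_heads, seq_len, head_dim))
    v = rng.standard_normal((n_kv_heads, seq_len, head_dim))
    print(grouped_query_attention(q, k, v).shape)
```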

Sliding Window Attention (SWA)

Sliding window attention is another method used to process attention over sequences. It uses a fixed-size attention window, so each token attends only to a limited number of nearby tokens (in Mistral 7B, the ones preceding it). With multiple layers stacking this windowed attention, the top layers eventually gain a broader perspective, potentially covering the entire input, up to a span set by the window size and the number of layers. This mechanism is analogous to the receptive fields seen in Convolutional Neural Networks (CNNs).
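The sketch below, in NumPy, builds a causal sliding-window mask and then composes it across layers to show how the receptive field grows. It assumes a window that includes the current token, so each extra layer extends the reach by `window - 1` positions; the function names are illustrative.

```python
import numpy as np


def sliding_window_mask(n: int, window: int) -> np.ndarray:
    """Causal mask: token i may attend to tokens j with i - window < j <= i."""
    i = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    return (j <= i) & (i - j < window)


def stacked_reach(n: int, window: int, n_layers: int) -> np.ndarray:
    """Boolean (n, n): True where token j can influence token i after n_layers layers."""
    step = sliding_window_mask(n, window).astype(int)
    reach = np.eye(n, dtype=int)
    for _ in range(n_layers):
        # One more layer: i reads from its window, each of which already reached further back.
        reach = (step @ reach > 0).astype(int)
    return reach.astype(bool)


if __name__ == "__main__":
    print(sliding_window_mask(6, 3).astype(int))
    for layers in (1, 2, 4):
        first = np.flatnonzero(stacked_reach(32, 4, layers)[-1]).min()
        print(f"{layers} layer(s): last token sees back to position {first}")
```

The Mistral 7B paper describes the same effect at scale: with a window of W = 4096 and 32 layers, the theoretical attention span is roughly 32 × 4096 ≈ 131K tokens (the exact count shifts by one per layer depending on whether the window includes the current token).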

Relatedly, the “dilated sliding window attention” of the Longformer model, which is conceptually similar to the sliding window method, computes only some diagonals of the attention matrix. As a result, memory usage grows linearly rather than quadratically with sequence length, making it a more efficient method for longer sequences.
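To illustrate the dilated variant, the NumPy sketch below builds a window in which token i attends to positions i + d·k for |k| up to a half-window w. The window is symmetric here for simplicity, and the parameter names are assumptions for this example rather than Longformer’s own API. Each row has at most 2w + 1 allowed entries, so an implementation that computes only those diagonals does about n × (2w + 1) work instead of n², while the dilation d widens the reach to d·w tokens on each side.

```python
import numpy as np


def dilated_window_mask(n: int, half_window: int, dilation: int) -> np.ndarray:
    """True where token i may attend to token j = i + dilation * k, with |k| <= half_window."""
    offset = np.arange(n)[None, :] - np.arange(n)[:, None]  # offset[i, j] = j - i
    on_dilated_grid = offset % dilation == 0  # NumPy's % is non-negative for a positive divisor
    within_reach = np.abs(offset) <= dilation * half_window
    return on_dilated_grid & within_reach


if __name__ == "__main__":
    n, w, d = 1000, 4, 2
    mask = dilated_window_mask(n, w, d)
    print("scores kept:", int(mask.sum()), "of", n * n)
    print("reach on each side:", w * d, "tokens")
```

The dense boolean mask is only for illustration and still occupies n² memory; the linear saving comes from storing and computing just the banded diagonals.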

Mistral AI’s Transparency vs. Safety Concerns in Decentralization

In their announcement, Mistral AI also emphasized transparency with the statement: “No tricks, no proprietary data.” At the same time, however, their model ‘Mistral-7B-v0.1’ is a pretrained base model with no built-in moderation, so it can generate a response to any query, which raises potential safety concerns. While models like GPT and Llama have mechanisms to discern when to respond, Mistral’s fully decentralized nature could be exploited by bad actors.

However, the decentralization of Large Language Models has its merits. While some might misuse it, people can harness its power for societal good and for making intelligence accessible to all.

Deployment Flexibility

One of the highlights is that Mistral 7B is available under the Apache 2.0 license. This means there are no real barriers to using it, whether for personal purposes, at a large corporation, or even at a governmental entity. You just need the right hardware to run it, or you may need to invest in cloud resources.

While there are other licenses, such as the simpler MIT License and the share-alike CC BY-SA 4.0 (which mandates credit and similar licensing for derivatives), Apache 2.0, with its explicit patent grant, provides a robust foundation for large-scale endeavors.

Final Thoughts

The rise of open-source Large Language Models like Mistral 7B signifies a pivotal shift in the AI industry, making high-quality language models accessible to a wider audience. Mistral AI’s use of techniques such as Grouped-query attention and Sliding Window Attention promises efficient performance without compromising quality.

While the decentralized nature of Mistral poses certain challenges, its flexibility and open-source licensing underscore the potential for democratizing AI. As the landscape evolves, the focus will inevitably be on balancing the power of these models with ethical considerations and safety mechanisms.

Up next for Mistral? The 7B model was just the beginning. The team aims to release even bigger models soon. If these new models match the 7B’s performance, Mistral might quickly rise as a top player in the industry, all within its first year.