We are seeing a progression of Generative AI applications powered by large language models (LLMs), from prompts to retrieval augmented generation (RAG) to agents. Agents are being talked about heavily in industry and research circles, mainly for the power this technology provides to transform Enterprise applications and deliver superior customer experiences. There are common patterns for building agents that enable first steps towards artificial general intelligence (AGI).

In my previous article, we saw a ladder of intelligence of patterns for building LLM powered applications. We start with prompts that capture the problem domain and use the LLM’s internal memory to generate output. With RAG, we augment the prompt with external knowledge searched from a vector database to control the outputs. Next, by chaining LLM calls, we can build workflows to realize complex applications. Agents take this to the next level by automatically determining how these LLM chains are to be formed. Let’s look in detail.

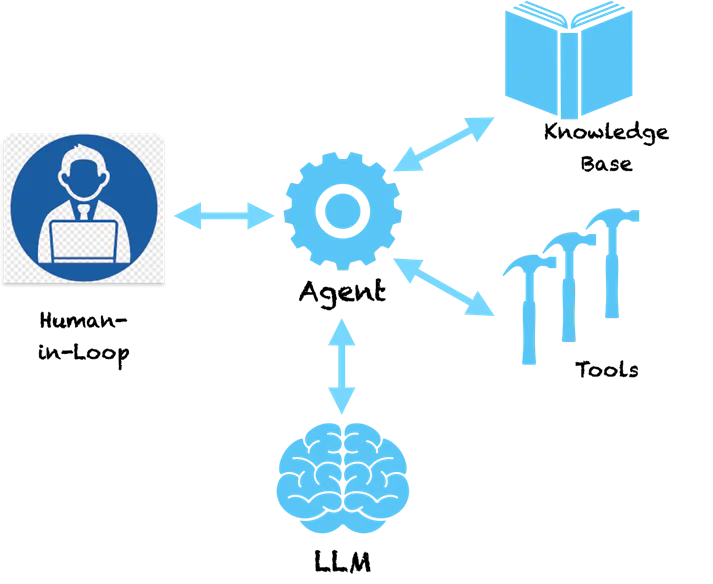

Agents – Under the hood

A key pattern with agents is that they use the language understanding power of the LLM to make a plan for how to solve a given problem. The LLM understands the problem and gives us a sequence of steps to solve it. However, it doesn’t stop there. Agents are not a pure support system that gives you recommendations on solving the problem and then passes the baton to you to take the recommended steps. Agents are empowered with tooling to go ahead and take the action. Scary, right!?

If we ask an agent a basic question like this:

H: Which company did the inventor of the telephone start?

Following is a sample of thinking steps that an agent may take.

Agent (THINKING):

- Thought: I need to search for the inventor of the telephone.

- Action: Search [inventor of telephone]

- Observation: Alexander Graham Bell

- Thought: I need to search for a company that was founded by Alexander Graham Bell

- Action: Search [company founded by Alexander Graham Bell]

- Observation: Alexander Graham Bell co-founded the American Telephone and Telegraph Company (AT&T) in 1885

- Thought: I’ve found the answer. I’ll return.

Agent (RESPONSE): Alexander Graham Bell co-founded AT&T in 1885

You can see that the agent follows a methodical way of breaking down the problem into subproblems that can be solved by taking specific Actions. The actions here are recommended by the LLM, and we can map them to specific tools that implement them. We could enable a search tool for the agent such that when it sees that the LLM has proposed search as an action, it calls this tool with the parameters provided by the LLM. The search here is on the internet but can just as well be redirected to an internal knowledge base like a vector database. The system now becomes self-sufficient and can figure out how to solve complex problems by following a series of steps. Frameworks like LangChain and LlamaIndex give you an easy way to build these agents and connect them to tools and APIs. Amazon recently launched their Bedrock Agents framework, which provides a visual interface for designing agents.
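To make the action-to-tool mapping concrete, here is a minimal sketch of such a loop in plain Python. It is not how LangChain, LlamaIndex, or Bedrock Agents implement agents. The LLM is replaced by a hypothetical `scripted_llm` that replays the trace above, the search tool is a hypothetical lookup table, and the `Final Answer:` marker is an assumed convention for signalling that the agent is done.

```python
import re
from typing import Callable, Dict

# Hypothetical stand-in for a search tool. A real agent would call a web
# search API or query a vector database here.
FAKE_INDEX = {
    "inventor of telephone": "Alexander Graham Bell",
    "company founded by Alexander Graham Bell": (
        "Alexander Graham Bell co-founded the American Telephone and "
        "Telegraph Company (AT&T) in 1885"
    ),
}


def search(query: str) -> str:
    return FAKE_INDEX.get(query, "No results found")


# Map the action names the LLM may emit to the functions that implement them.
TOOLS: Dict[str, Callable[[str], str]] = {"Search": search}

# Hypothetical stand-in for the LLM: it replays the steps of the sample trace.
SCRIPTED_STEPS = [
    "Thought: I need to search for the inventor of the telephone.\n"
    "Action: Search [inventor of telephone]",
    "Thought: I need to search for a company that was founded by "
    "Alexander Graham Bell\n"
    "Action: Search [company founded by Alexander Graham Bell]",
    "Thought: I've found the answer. I'll return.\n"
    "Final Answer: Alexander Graham Bell co-founded AT&T in 1885",
]


def scripted_llm(transcript: str) -> str:
    # Pick the next step based on how many observations were recorded so far.
    return SCRIPTED_STEPS[transcript.count("Observation:")]


# Matches lines such as: Action: Search [inventor of telephone]
ACTION_RE = re.compile(r"Action:\s*(\w+)\s*\[(.*?)\]")


def run_agent(question: str,
              llm: Callable[[str], str],
              tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        output = llm(transcript)
        transcript += "\n" + output
        if "Final Answer:" in output:
            return output.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError("LLM output contained no action or final answer")
        tool_name, tool_input = match.groups()
        if tool_name in tools:
            observation = tools[tool_name](tool_input)
        else:
            observation = f"Unknown tool: {tool_name}"
        # Feed the tool result back so the next LLM call can reason over it.
        transcript += f"\nObservation: {observation}"
    raise RuntimeError("Agent did not finish within max_steps")
```

Calling `run_agent("Which company did the inventor of the telephone start?", scripted_llm, TOOLS)` walks through the same Thought, Action, and Observation steps as the trace. Swapping `search` for a function that queries an internal knowledge base would redirect the agent without touching the loop.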

Under the hood, agents follow a special style of sending prompts to the LLM that makes it generate an action plan. The above Thought-Action-Observation pattern is popular in a type of agent called ReAct (Reasoning and Acting). Other types of agents include MRKL and Plan & Execute, which mainly differ in their prompting style.
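As a rough illustration of what such a prompting style can look like, the sketch below assembles a Thought-Action-Observation prompt. The template wording, the `build_react_prompt` helper, and the `Final Answer:` marker are all assumptions made for this example and are not copied from any framework.

```python
from typing import Dict

# Illustrative wording only; real frameworks ship their own templates.
REACT_TEMPLATE = """Answer the question as best you can. You have access to these tools:

{tool_list}

Use this format:
Thought: reason about what to do next
Action: ToolName [tool input]
Observation: the result of the action
(Thought, Action and Observation can repeat several times)
Thought: I've found the answer. I'll return.
Final Answer: the answer to the question

Question: {question}
{scratchpad}Thought:"""


def build_react_prompt(question: str,
                       tools: Dict[str, str],
                       scratchpad: str = "") -> str:
    """Render the prompt from the question, tool descriptions, and prior steps."""
    tool_list = "\n".join(f"- {name}: {desc}" for name, desc in tools.items())
    # The scratchpad holds earlier Thought/Action/Observation lines, if any.
    if scratchpad and not scratchpad.endswith("\n"):
        scratchpad += "\n"
    return REACT_TEMPLATE.format(
        tool_list=tool_list, question=question, scratchpad=scratchpad
    )
```

The prompt ends with `Thought:` so that the model continues in the expected format. A different agent type would keep the same loop but change this template.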

For more complex agents, the actions may be tied to tools that cause changes in source systems. For example, we could connect the agent to a tool that checks the vacation balance and applies for leave in an ERP system for an employee. Now we could build a nice chatbot that interacts with users and, via a chat command, applies for leave in the system. No more complex screens for applying for leave, just a simple unified chat interface. Sounds exciting!?
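The leave example could be sketched as two tools backed by a toy system of record. Everything here is hypothetical: `FakeERP` is an in-memory stand-in, and a real integration would call the ERP vendor's API instead.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FakeERP:
    """Hypothetical in-memory stand-in for an ERP system."""
    balances: Dict[str, int] = field(default_factory=dict)
    requests: List[Tuple[str, int]] = field(default_factory=list)

    def check_vacation_balance(self, employee_id: str) -> str:
        # Read-only tool: safe to call as often as the agent likes.
        days = self.balances.get(employee_id)
        if days is None:
            return f"Unknown employee: {employee_id}"
        return f"{employee_id} has {days} vacation days left"

    def apply_for_leave(self, employee_id: str, days: int) -> str:
        # State-changing tool: validates the request before writing anything.
        balance = self.balances.get(employee_id)
        if balance is None:
            return f"Unknown employee: {employee_id}"
        if days <= 0:
            return "Rejected: leave must be for at least one day"
        if days > balance:
            return f"Rejected: requested {days} days but only {balance} available"
        self.balances[employee_id] = balance - days
        self.requests.append((employee_id, days))
        return f"Approved: {days} days booked, {balance - days} remaining"
```

The strings these methods return would be fed back to the agent as observations. Note that `apply_for_leave` changes the system of record, which is the kind of action the next section is concerned with.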

Caveats and need for Responsible AI

Now what if we have a tool that invokes stock trading transactions using a pre-authorized API? You build an application where the agent studies stock changes (using tools) and makes decisions for you on buying and selling stock. What if the agent sells the wrong stock because it hallucinated and made a wrong decision? Since LLMs are huge models, it is difficult to pinpoint why they make certain decisions, and hallucinations are common in the absence of proper guardrails.

While agents are fascinating, you have probably guessed how dangerous they can be. If they hallucinate and take a wrong action, that could cause huge financial losses or major issues in Enterprise systems. Hence Responsible AI is becoming of utmost importance in the age of LLM powered applications. The principles of Responsible AI around reproducibility, transparency, and accountability try to put guardrails on decisions taken by agents and suggest risk analysis to decide which actions need a human-in-the-loop. As more complex agents are designed, they need more scrutiny, transparency, and accountability to make sure we know what they are doing.
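One way to picture a human-in-the-loop guardrail is a thin wrapper around tool calls, sketched below under stated assumptions. The `RISK_LEVELS` table, the tool names, and the `approve` callback are hypothetical. In practice the risk classification would come from the risk analysis described above, and approval would go through a real review step.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical outcome of a risk analysis: which actions need human sign-off.
RISK_LEVELS: Dict[str, str] = {
    "Search": "low",
    "BuyStock": "high",
    "SellStock": "high",
}


def guarded_call(tool_name: str,
                 tool_input: str,
                 tools: Dict[str, Callable[[str], str]],
                 approve: Callable[[str, str], bool],
                 audit_log: List[Tuple[str, str, str]]) -> str:
    """Run a tool only if it is low risk or a human approves it."""
    if tool_name not in tools:
        audit_log.append((tool_name, tool_input, "unknown"))
        return f"Unknown tool: {tool_name}"
    # Tools missing from the risk table are treated as high risk.
    risk = RISK_LEVELS.get(tool_name, "high")
    if risk == "high" and not approve(tool_name, tool_input):
        audit_log.append((tool_name, tool_input, "blocked"))
        return f"Blocked: {tool_name} requires human approval"
    result = tools[tool_name](tool_input)
    # Every executed action is recorded for transparency and accountability.
    audit_log.append((tool_name, tool_input, "executed"))
    return result
```

With this wrapper a hallucinated `SellStock` action is held until a person confirms it, while low-risk searches run unattended. The audit log keeps a record of what the agent attempted and what was actually executed.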

Closing thoughts

The ability of agents to generate a path of logical steps with actions gets them really close to human reasoning. Empowering them with more powerful tools can give them superpowers. Patterns like ReAct try to emulate how humans solve problems, and we will see better agent patterns that are relevant to specific contexts and domains (banking, insurance, healthcare, industrial, etc.). The future is here, and the technology behind agents is ready for us to use. At the same time, we need to pay close attention to Responsible AI guardrails to make sure we aren’t building Skynet!